I am doing some work on the DSL book right now, and I ran into this example, which is simply too delicious not to post about.

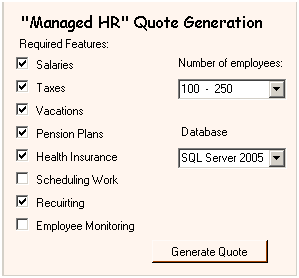

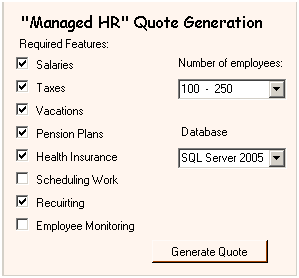

Assume that you have the following UI, which you use to let a salesperson generate a quote for your system.

This is much more than just a UI issue, to be clear. You have a fully fledged logic system here. Calculating the total cost is the easy part; first you have to understand what you need.

Let us define a set of rules for the application; it will be clearer when we have the list in front of us:

- The Salary module requires a machine per 150 users.

- The Taxes module requires a machine per 50 users.

- The Vacations module requires the Scheduling Work module.

- The Vacations module requires the External Connections module.

- The Pension Plans module requires the External Connections module.

- The Pension Plans module must be on the same machine as the Health Insurance module.

- The Health Insurance module requires the External Connections module.

- The Recruiting module requires a connection to the internet, and therefore requires a firewall from the recommended list.

- The Employee Monitoring module requires the CompMonitor component.
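
To make the two "machine per N users" rules concrete, here is a minimal Python sketch of the arithmetic they imply. The table and the function name are my own illustration, not part of the quote system, and it assumes that a partially filled machine still counts as a whole machine.

```python
# Hypothetical table built from the Salary and Taxes rules above.
USERS_PER_MACHINE = {"salary": 150, "taxes": 50}


def machines_needed(module: str, user_count: int) -> int:
    """Round up: a partially filled machine is still a machine (assumption)."""
    per_machine = USERS_PER_MACHINE[module]
    # Integer ceiling division, so no floating point is involved.
    return (user_count + per_machine - 1) // per_machine


salary_machines = machines_needed("salary", 400)
taxes_machines = machines_needed("taxes", 400)
```

For 400 users this comes out to 3 machines for Salary and 8 for Taxes.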

Of course, this fictitious sample is still too simple; we can probably sit down and come up with fifty or so more rules that we need to handle. Just handling the second-level dependencies (External Connections, CompMonitor, etc.) would be a big task, for example.

Assume that you have not a single such system, but 50 of them. I know of a company that spent 10 years and has 100,000 lines of C++ code (that implements a poorly performing Lisp machine, of course) to solve this issue.

My solution?

    specification @vacations:
        requires @scheduling_work
        requires @external_connections

    specification @salary:
        users_per_machine 150

    specification @taxes:
        users_per_machine 50

    specification @pension:
        same_machine_as @health_insurance

Why do we need a DSL for this? Isn’t this a good candidate for a data storage system? It seems to me that we could have expressed the same ideas with XML (or a database, etc.) just as easily. Here is the same concept, now expressed in XML.

    <specification name="vacations">
        <requires name="scheduling_work"/>
        <requires name="external_connections"/>
    </specification>

    <specification name="salary">
        <users_per_machine value="150"/>
    </specification>

    <specification name="taxes">
        <users_per_machine value="50"/>
    </specification>

    <specification name="pension">
        <same_machine_as name="health_insurance"/>
    </specification>

That is a one-to-one translation between the two, so why do I need a DSL here?

Personally, I think that the DSL syntax is nicer, and the amount of work to get from a DSL to the object model is very small compared to the work required to translate to the same object model from XML.
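
To give a feel for the XML side of that comparison, here is a minimal Python sketch of the mapping code it takes. This is not the code from the book: the `Specification` class and `load_specifications` function are hypothetical names, and since the fragment has no single root element, the sketch wraps it in one before parsing.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Specification:
    """Hypothetical object model for one module's rules."""
    name: str
    requires: List[str] = field(default_factory=list)
    users_per_machine: Optional[int] = None
    same_machine_as: Optional[str] = None


def load_specifications(xml_text: str) -> Dict[str, Specification]:
    # The fragment has several top-level elements, so wrap it in a root.
    root = ET.fromstring(f"<specifications>{xml_text}</specifications>")
    specs = {}
    for node in root.findall("specification"):
        spec = Specification(node.get("name"))
        # Every element type needs its own hand-written mapping branch.
        for child in node:
            if child.tag == "requires":
                spec.requires.append(child.get("name"))
            elif child.tag == "users_per_machine":
                spec.users_per_machine = int(child.get("value"))
            elif child.tag == "same_machine_as":
                spec.same_machine_as = child.get("name")
            else:
                raise ValueError(f"unknown element: {child.tag}")
        specs[spec.name] = spec
    return specs


SAMPLE = """
<specification name="vacations">
  <requires name="scheduling_work"/>
  <requires name="external_connections"/>
</specification>
<specification name="salary">
  <users_per_machine value="150"/>
</specification>
"""

specs = load_specifications(SAMPLE)
```

Each new kind of rule means another branch in that loop, which is the sort of translation work I would rather not write by hand.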

That is mostly a personal opinion, however. For a purely declarative DSL, we are comparable with XML in almost all things. It gets interesting when we decide that we don’t want this purity. Let us add a new rule to the mix, shall we?

- The Pension Plans module must be on the same machine as the Health Insurance module, if the user count is less than 500.

- The Pension Plans module requires a distributed messaging backend, if the user count is greater than 500.

Trying to express that in XML can be a real pain. In fact, it means that we are trying to shove programming concepts into the XML, which is always a bad idea. We could try to put this logic in the quote generation engine, but that complicates the engine for no good reason, ties it to the specific application that we are using, and in general makes a mess.

Using our DSL (with no modification needed), we can write it:

    specification @pension:
        if information.UserCount < 500:
            same_machine_as @health_insurance
        else:
            requires @distributed_messaging_backend
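
For readers who want to see what such a rule boils down to once it runs, here is a rough Python equivalent. It is a sketch under my own assumptions, not the DSL's actual implementation: `QuoteInformation` stands in for `information`, `user_count` for `UserCount`, and `Specification` is a hypothetical result object.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class QuoteInformation:
    user_count: int  # stands in for information.UserCount


@dataclass
class Specification:
    """Hypothetical result of evaluating one module's rules."""
    name: str
    requires: List[str] = field(default_factory=list)
    same_machine_as: Optional[str] = None


def pension(information: QuoteInformation) -> Specification:
    spec = Specification("pension")
    # Same branch as the DSL: fewer than 500 users share a machine,
    # everything else gets the messaging backend.
    if information.user_count < 500:
        spec.same_machine_as = "health_insurance"
    else:
        spec.requires.append("distributed_messaging_backend")
    return spec


small = pension(QuoteInformation(user_count=200))
large = pension(QuoteInformation(user_count=800))
```

The condition is ordinary code, so the rule stays next to the module it describes instead of leaking into the quote generation engine.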

As you can imagine, once you have run all the rules in the DSL, you are left with a very simple problem to solve, with all the parameters well known.
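
As one illustration of what that leftover problem might look like, here is a small Python sketch that expands a salesperson's selection into everything it requires. The `REQUIRES` table is a hypothetical result of running the rules listed earlier, and `expand` is a name I made up for the example.

```python
# Hypothetical output of running the rules: module -> modules it requires.
REQUIRES = {
    "vacations": ["scheduling_work", "external_connections"],
    "pension": ["external_connections"],
    "health_insurance": ["external_connections"],
}


def expand(selected):
    """Return the selected modules plus everything they require, transitively."""
    result = set()
    pending = list(selected)
    while pending:
        module = pending.pop()
        if module in result:
            continue  # already handled, which also guards against cycles
        result.add(module)
        pending.extend(REQUIRES.get(module, []))
    return result


quote_modules = expand(["vacations", "pension"])
```

Selecting Vacations and Pension Plans pulls in Scheduling Work and External Connections as well, and from there pricing is a matter of adding things up.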

In fact, throughout the process, there isn't a single place of overwhelming complexity.

I like that.