For some reason, the moment that peole start working on Enterprise Solutions, there is a... tendency to assume that because we are going to build a big and complex application, we can afford to ignore best practices and proven practices.

For some reason, the moment that peole start working on Enterprise Solutions, there is a... tendency to assume that because we are going to build a big and complex application, we can afford to ignore best practices and proven practices.

That is just wrong. And it applies in many manners to many parts of the application, but it never applies to something as obiviously as it applies to the database.

This is just the results of a very short research:

- The metadata driven approach, AKA the 5 tables DB

- The Blob

- The 5th normalized form DB

- The 5th denormalized form DB

- The table a day DB

- The obsfuscated database - create table tbl15 (fld_a int, fld_b int, fld_g nvarchar(255) )

And those are just of the database structure, we haven't yet arrived to the database behavior yet. Here we have interesting approachs such as rolling your own "Transactions" table, requiring 40 joins to get a simple value, using nvarchar as the ultimate extensibility, etc.

So, how do we approach building a database that we can actually show in public? Let us start with thinking about the constraints that we have for the application. The database needs to support...

- OLTP for the application itself.

- Reports.

- Performance.

- ETL processes to take data in and out of the sytem.

- Large amount of data.

- Large amount of entities.

Notice how we don't have any suggestion about integrating with the application at the database level. That is a fairly common antipattern.

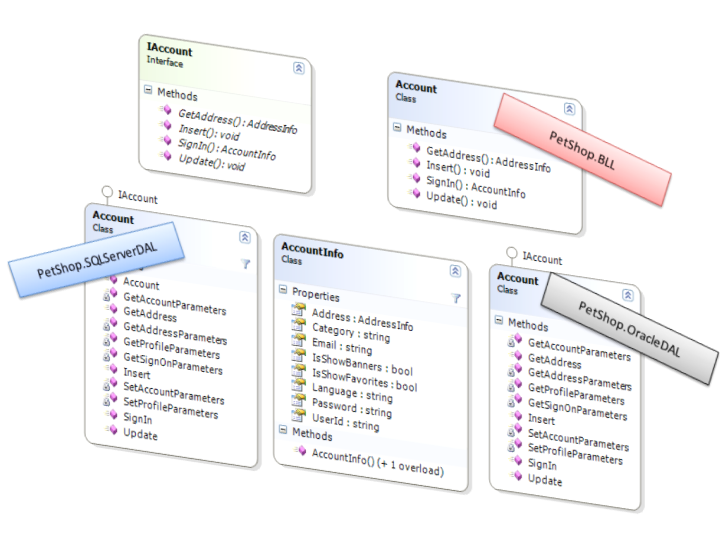

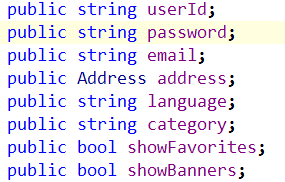

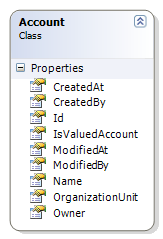

We are going to try to keep a one to one mapping between the entity and the table structure, because that will make it significantly easier to work with the system. One to one means that the Account Name translate to an Account.Name in the entity model, and Accounts.Name in the database model.

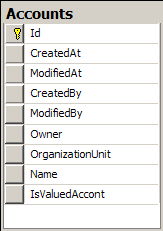

We probably want the usual auditing suspects, such as Id, CreatedAt, ModifiedAt, CreatedBy, ModifiedBy, Owner, OrganizationUnit. Maybe a few more, if this makes sense. Probably not too much, because that can get to be a problem.

We probably want the usual auditing suspects, such as Id, CreatedAt, ModifiedAt, CreatedBy, ModifiedBy, Owner, OrganizationUnit. Maybe a few more, if this makes sense. Probably not too much, because that can get to be a problem.

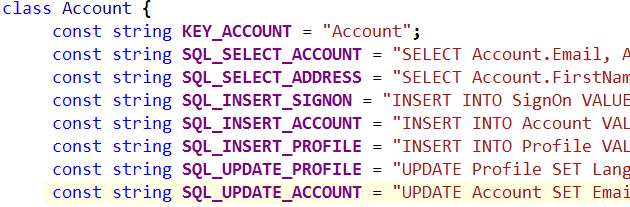

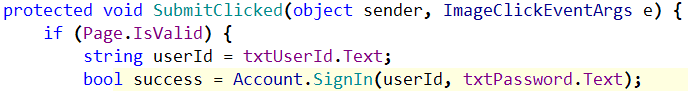

Accessing and manipulating this in the application layer it going to be trivial, so I am not really going to cover that in depth.

What I do want to talk about is how we expose it to the outside world? And by that I mean reports and ETL processes.

We have several options to try to do that. We can just let the reports and ETL processes to read from the database tables directly. This is the simplest approach, I think.

Other options include views, stored procedures, and other DB thingies that we can use. I have seen systems where an entity was composed of several tables, and the report was done off of a view that joined it all together.

The underlying problem is that I have versioning to consider. I am going to dedicate a full post to the problem of building upgradable user customizable applications, so I'll delay it to then, but let us just say that radically changing the database schema between version will be painful for the users. The usual way to handle that is to only make promises for the views, and not for the tables themselves. That is a good way to handle that, in my opinion.

I would suggest putting those in a separate schema, to make it clearer that the seperation is imortant. This also gives you the ability to later do a view to table refactoring, and maintain that with triggers. This is usually a bad idea, but for performance reasons, it may very well be a good solution.

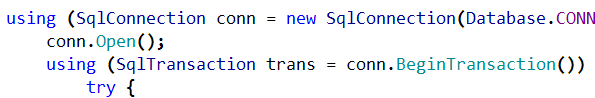

ETL processes can use the same mechanism that reports use to read data from the database efficently, but nothing writes to the database except the application. At one point I wrote a change DB password util that run every two hours, it would change the database password and update the relevant config files.

I think that you can guess what the driving force to that where, no?

Getting data into the database can be done through application services (not neccesarily web services, btw). A simple example would be API similar to this one:

void Insert(params Account[] accounts); void Update(params Account[] accounts); void Delete(params Account[] accounts);

This API explicitly allows for bulk operations, so it can be very nice to work with, instead of having to do things on per-row basis, which basically kills performance.

How to get good performance from this system is another hard question. In this case, I would usually recommend on getting a good DBA to look at the perfrormance charactaristics of the application and optimize the database structure if needed. But, a much easier solution to performance problems in the database server is to not hit the DB server, but use caching. Distributed caching solutions, like Memcached, NCache, etc are really good way of handling that.

No business logic in the DB! This is important, if you put business logic in the DB, you have to get to the DB in order to execute the business logic. This kills scalablity, hurts the ability to understand the solution, and in general makes life miserable all around.

Reports are an interesting problem. How do you deal with security, for instance? Consider the security infrastructure that I already presented. This security infrastructure should also come with a database function that you can use like this:

SELECT * FROM Accounts

WHERE IsAllowed(Accounts.EntitySecurityKey, @UserId, 'Account.View')

Or this:

SELECT * FROM Accounts WHERE Accounts.EntitySecurityKey IN ( SELECT EntitySecurityId FROM GetAllowed(@UserId, 'Account.View') )

Both of which provides really easy manner to get security for the reports. If we wanted to enforce that, we can force the report writer to write somtehing like this:

SELECT * FROM GetAllowedAccounts(@UserId, 'Account.View')

We can probably get away with assuming that 'Account.View' is the default operation, so it is even shorter. Among other things, this actually have valid performance characteristics.

This post it turning out to be a "don't make stupid mistakes" post, because I don't think that I am writing anything new here. About how to avoid making stupid mistake, that is fairly simple as well. Get a good DBA (that is left as an excersize for the reader), give him/her a big stick and encourage good design through superior firepower.