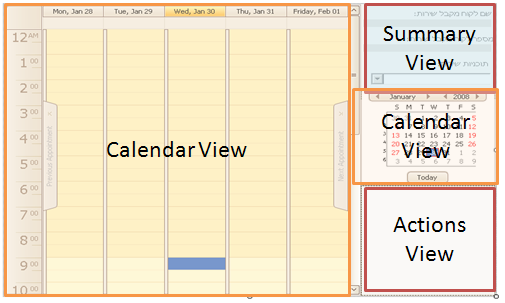

I am trying to think of a way to make this view work. It is a single view that contains more views, each of them responsible for a subset of the functionality in the view. This is the first time in a long time that I am dealing with smart client architecture.

It is surprising to see how much the web affects my thinking. I am having trouble dealing with the stateful nature of things.

Anyway, the problem that I have here is that I want to have a controller with the following constructor:

    public class AccountController
    {
        public AccountController(
            ICalendarView calendarView,
            ISummaryView summaryView,
            IActionView actionView)
        {
        }
    }

The problem is that those views are embedded inside a parent view. And since those sub views are both views and controls inside the parent view, I can't think of a good way to deal with both of them at once.

I think that I am relying on the designer more than is healthy for me. I know how to solve the issue: break those into three views and build them at runtime. I just... hesitate before doing this, although I am not really sure why.

Another option is to register the view instances when the parent view loads, but that means that the parent view has to know about the IoC container, and that makes me more uncomfortable.

It is a bit more of a problem when I consider that there are two associated calendar views, which compose a single view.

Interesting problem.

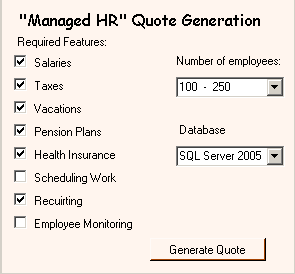

Let us assume that we need to build a quote generation program. This means that we need to generate a quote out of the customer's desires and the system requirements.

The customer's desires can be expressed in this UI:

The system requirements are:

- The Salary module requires a machine per 150 users.

- The Taxes module requires a machine per 50 users.

- The Vacations module requires the Scheduling Work module.

- The Vacations module requires the External Connections module.

- The Pension Plans module requires the External Connections module.

- The Pension Plans module must be on the same machine as the Health Insurance module.

- The Health Insurance module requires the External Connections module.

- The Recruiting module requires a connection to the internet, and therefore requires a firewall from the recommended list.

- The Employee Monitoring module requires the CompMonitor component.

The first DSL that I wrote for it looked like this:

    if has("Vacations"):
        add "Scheduling"

    number_of_machines["Salary"] = (user_count / 150) + 1
    number_of_machines["Taxes"] = (user_count / 50) + 1

But this looked like a really bad idea, so I turned to a purely declarative approach, like this one:

    specification @vacations:
        requires @scheduling_work
        requires @external_connections

    specification @salary:
        users_per_machine 150

    specification @taxes:
        users_per_machine 50

    specification @pension:
        same_machine_as @health_insurance

The problem with this approach is that I wonder, is this really something that you need a DSL for? You can do the same using XML very easily.

The advantage of a DSL is that we can put logic in it, so we can also do something like:

    specification @pension:
        if user_count < 500:
            same_machine_as @health_insurance
        else:
            requires @distributed_messaging_backend
            requires @health_insurance

Which would be much harder to express in XML.

Of course, then we are not in the purely declarative DSL anymore.
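To make the declarative rules a bit more concrete, here is a minimal sketch of how they could be evaluated once parsed. It is written in Python rather than Boo, and every name in it (`Specification`, `SPECS`, `resolve_modules`, `machines_needed`) is hypothetical, not part of any real DSL engine. It also assumes that "a machine per N users" means rounding up, which is my reading of the requirement rather than something the rules state.

```python
from dataclasses import dataclass, field
from math import ceil
from typing import List, Optional


@dataclass
class Specification:
    """One module's declared requirements (illustrative names only)."""
    requires: List[str] = field(default_factory=list)
    users_per_machine: Optional[int] = None
    same_machine_as: Optional[str] = None


# The declarative rules from the DSL, written as plain data.
SPECS = {
    "vacations": Specification(requires=["scheduling_work", "external_connections"]),
    "salary": Specification(users_per_machine=150),
    "taxes": Specification(users_per_machine=50),
    "pension": Specification(same_machine_as="health_insurance"),
}


def resolve_modules(selected):
    """Return the selected modules plus everything they transitively require."""
    resolved, pending = set(), list(selected)
    while pending:
        module = pending.pop()
        if module in resolved:
            continue
        resolved.add(module)
        # Modules without a declared specification simply require nothing.
        pending.extend(SPECS.get(module, Specification()).requires)
    return resolved


def machines_needed(module, user_count):
    """One machine per `users_per_machine` users, rounded up (assumption)."""
    spec = SPECS.get(module, Specification())
    if spec.users_per_machine is None:
        return None  # no sizing rule declared for this module
    return max(1, ceil(user_count / spec.users_per_machine))
```

With this shape, asking for the Vacations module pulls in Scheduling Work and External Connections automatically, and the sizing rules stay in data instead of in imperative code.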

Thoughts?

Here is a tidbit that I worked on yesterday for the DSL book:

    operation "/account/login"

    if Principal.IsInRole("Administrators"):
        Allow("Administrators can always log in")
        return

    if date.Now.Hour < 9 or date.Now.Hour > 17:
        Deny("Cannot log in outside of business hours, 09:00 - 17:00")

And another one:

    if Principal.IsInRole("Managers"):
        Allow("Managers can always approve orders")
        return

    if Entity.TotalCost >= 10_000:
        Deny("Only managers can approve orders of more than 10,000")

    Allow("All users can approve orders less than 10,000")

There is no relation to Rhino Security, just to be clear.

I simply wanted a sample for a DSL, and this seems natural enough.
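For comparison, here is roughly what the order approval rule looks like as a plain function. This is only a sketch in Python, not the DSL's actual runtime: `Decision` and `can_approve_order` are made-up names, and it assumes that the first `Allow` or `Deny` that is reached decides the outcome.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    allowed: bool
    reason: str


def can_approve_order(roles, total_cost):
    """Managers may always approve; everyone else only below 10,000."""
    if "Managers" in roles:
        return Decision(True, "Managers can always approve orders")
    if total_cost >= 10_000:
        return Decision(False, "Only managers can approve orders of more than 10,000")
    return Decision(True, "All users can approve orders less than 10,000")
```

The DSL version reads almost the same, but it can be edited and deployed without recompiling the application, which is the point of the sample.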

I am looking at getting a new laptop. This is something that should serve as a development machine, and I am getting tired of waiting for the computer to do its work. As such, I intend to invest in a high end machine, but I am still considering which and what.

Minimum Requirements are 4GB RAM, Dual Core, Fast HD, big screen.

Video doesn't interest me, since even the low end ones are more than I would ever want. Weight is also not an issue, I would be perfectly happy with a laptop that came with its own wheelbarrow.

I am seriously thinking about getting a laptop with a solid state drive, and I am beginning to wonder if quad core is worth the price. Then again, I am currently writing dream checks for it, which is why I feel like I can go wild with all the features.

Any recommendations?

I am going to give a talk about the high end usages of OR/M and what it can do to an application design.

I have about one hour for this, and plenty of topics. I am trying to decide which topics are both interesting and important enough to include.

Here are some of the topics that I am thinking about:

- Partial Domain Models

- Persistent Specifications

- Googlize your domain model

- Integration with IoC containers

- Taking polymorphism up a notch - adaptive domain models

- Aspect Orientation

- Future Queries

- Scaling up and out

- Distributed Caching

- Shards

- Extending the functionality with listeners

- Off the side reporting

- Queries as Business Logic

- Cross Cutting Query Enhancement

- Filters

- Dealing with temporal domains

Anything that you like? Anything that you would like me to talk about that isn't here?

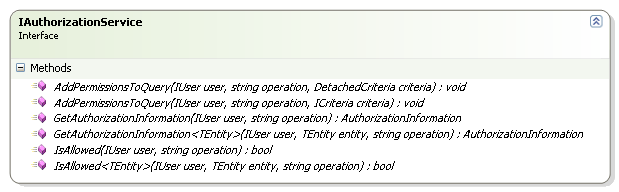

When I thought about Rhino Security, I imagined it with a single public interface that had exactly three methods:

- IsAllowed

- AddPermissionsToQuery

- Why

When I sat down and actually wrote it, it turned out to be quite different. It turns out that you usually want to handle editing permissions, not just check permissions. The main interface that you'll deal with is usually IAuthorizationService:

It has the three methods that I thought about (plus overloads), and with the exception of renaming Why() to GetAuthorizationInformation(), it is pretty much how I conceived it. That change was motivated by the desire to get standard API concepts. Why() isn't a really good method name, after all.

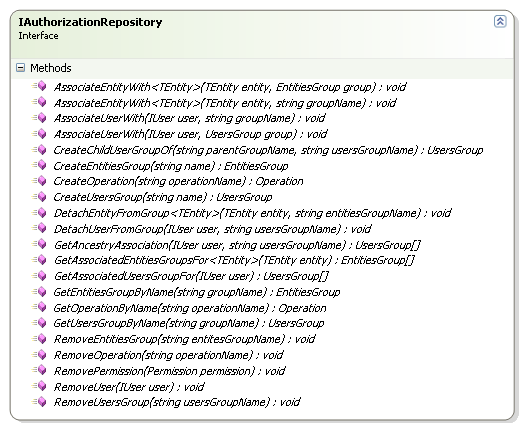

For building the security model, we have IAuthorizationRepository:

This is a much bigger interface, and it composes almost everything that you need to do in order to create the security model that you want. I am at the point where this is getting just a tad too big, another few methods and I'll need to break it in two, I think. I am not quite sure how to do this and keep it cohesive.

Wait, why do we need things like CreateOperation() in the first place? Can't we just create the operation and call Repository<Operation>.Save() ?

No, you can't, this interface is about more than just simple persistence. It is also handling logic related to keeping the security model. What do I mean by that? For example, CreateOperation("/Account/Edit") will actually generate two operations, "/Account" and "/Account/Edit", where the first is the parent of the second.
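To illustrate the parent/child behavior, here is a minimal in-memory sketch in Python. It is not the Rhino Security implementation: `Operation`, `OperationStore` and `create_operation` are stand-in names, and the real code persists through NHibernate rather than a dictionary.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Operation:
    name: str
    parent: Optional["Operation"] = None


class OperationStore:
    """In-memory stand-in for the repository (hypothetical, for illustration)."""

    def __init__(self):
        self._by_name = {}

    def create_operation(self, name):
        """Create `name` and any missing ancestors, linking each to its parent."""
        parts = [p for p in name.split("/") if p]
        if not parts:
            raise ValueError("operation name must contain at least one segment")
        parent = None
        for depth in range(1, len(parts) + 1):
            path = "/" + "/".join(parts[:depth])
            op = self._by_name.get(path)
            if op is None:
                # Only create what does not exist yet, so ancestors are shared.
                op = Operation(path, parent)
                self._by_name[path] = op
            parent = op
        return parent  # the operation for the full path

    def names(self):
        return sorted(self._by_name)
```

Calling `create_operation("/Account/Edit")` on an empty store leaves two operations behind, "/Account" and "/Account/Edit", and a later `create_operation("/Account/Delete")` reuses the existing "/Account" parent.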

This interface also ensures that we are playing nicely with the second level cache, which is also important.

I did say that this interface is almost everything, what did I leave out?

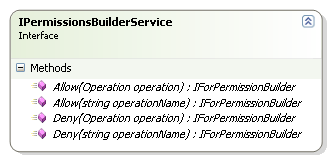

The actual building of the permissions, of course:

This is utilizing a fluent interface in order to define the permission. A typical definition would be:

    permissionsBuilderService
        .Allow("/Account/Edit")
        .For(CurrentUser)
        .OnEverything()
        .DefaultLevel()
        .Save();

    permissionsBuilderService
        .Deny("/Account/Edit")
        .For("Administrators")
        .OnEverything()
        .DefaultLevel()
        .Save();

This allows the current user to edit all accounts, and denies all members of the administrators group the account editing permission.

And that sums up all the interfaces that you have to deal with in order to work with Rhino Security.

Next, the required extension points.

Let us assume that we have the following model:

- User
  - n:m -> Blogs
    - n:m -> Users

Given a user, how would you find all the users that are members of all the blogs that the user is a member of?

It turns out that NHibernate makes it very easy:

    DetachedCriteria usersForSameBlog = DetachedCriteria.For<User>()
        .Add(Expression.IdEq(userId))
        .CreateCriteria("Blogs")
        .CreateCriteria("Users", "user")
        .SetProjection(Projections.Id());

    session.CreateCriteria(typeof(User))
        .Add(Subqueries.PropertyIn("id", usersForSameBlog))
        .List();

And the resulting SQL is:

    SELECT this_.Id        AS Id5_0_,
           this_.Password  AS Password5_0_,
           this_.Username  AS Username5_0_,
           this_.Email     AS Email5_0_,
           this_.CreatedAt AS CreatedAt5_0_,
           this_.Bio       AS Bio5_0_
    FROM   Users this_
    WHERE  this_.Id IN (SELECT this_0_.Id AS y0_
                        FROM   Users this_0_
                               INNER JOIN UsersBlogs blogs4_
                                 ON this_0_.Id = blogs4_.UserId
                               INNER JOIN Blogs blog1_
                                 ON blogs4_.BlogId = blog1_.Id
                               INNER JOIN UsersBlogs users6_
                                 ON blog1_.Id = users6_.BlogId
                               INNER JOIN Users user2_
                                 ON users6_.UserId = user2_.Id
                        WHERE  this_0_.Id = @p0)

I have fairly strong opinions about the way I build software, and I rarely want to compromise on them. When I want to build good software, I tend to do this with the hindsight of what is not working. As such, I tend to be... demanding of the tools that I use.

What follows is a list of commit logs that I can directly correlate to requirements from Rhino Security. All of them are from the last week or so.

NHibernate

- Applying patch (with modifications) from Jesse Napier, to support unlocking collections from the cache.

- Adding tests to 2nd level cache.

- Applying patch from Roger Kratz, performance improvements on Guid types.

- Fixing javaism in dialect method. Supporting subselects and limits in SQLite

- Adding supporting for paging sub queries.

- Need to handle the generic version of dictionaries as well.

- Override values in source if they already exist when copying values from dictionary

- Adding the ability to specify a where parameter on the table generator, which allows to use a single table for all the entities.

- Fixing bug that occurs when loading two many to many collection eagerly from the same table, where one of them is null.

Castle

- Fixing the build, will not add an interceptor twice when it was already added by a facility

- Generic components will take their lifecycle / interceptors from the parent generic handler instead of the currently resolving handler.

- Adding ModelValidated event, to allow external frameworks to modify the model before the HBM is generated.

- We shouldn't override the original exception stack

Rhino Tools

- Adding tests for With.QueryCache(), making sure that With.QueryCache() can be entered recursively. Increased timeout of AsyncBulkInsertAppenderTestFixture so it can actually run on my pitiful laptop.

- Adding support for INHibernateInitializationAware in ARUnitOfWorkTestContext

- Adding error handling for AllAssemblies. Adding a way to execute an IConfigurationRunner instance that was pre-compiled.

- Will not eager load assemblies any more, cause too many problems with missing references that are still valid to run

Without those modifications, I would probably have been able to build the solution I wanted, but it would have had to work around those issues. By having control over the entire breadth and width of the stack, I can make sure that my solution is ideally suited to what I think is the best approach. As an aside, it turns out that other people tend to benefit from that.