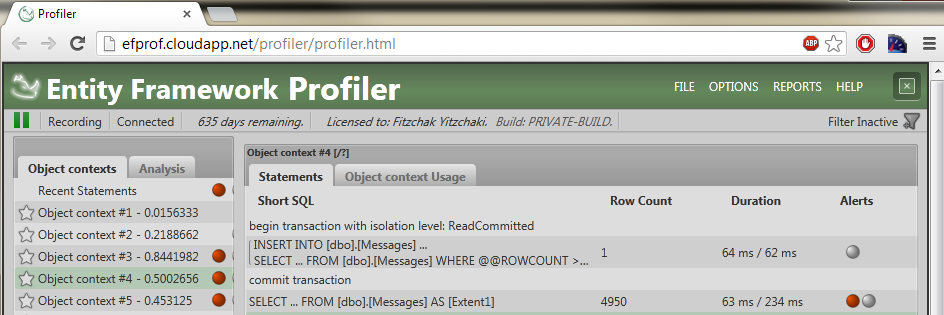

I’m not talking about this much anymore, but alongside RavenDB, my company produces a set of tools to help you work with OR/M (object relational mappers) such as NHibernate or Entity Framework as well as tracking what is going on with Cosmos DB.

The profilers are implemented as two separate components. We have the Appender, which runs inside the profiled process, and the Profiler, which is a WPF application that analyzes and shows you the results of the profiling. For the profilers, all the execution is done on the users’ machine.

We have crash reporting enabled and we are diligent in fixing any and all errors from the field. We recently ran into a whole spate of errors, looking something like this:

System.NullReferenceException: Object reference not set to an instance of an object. at System.Windows.Controls.VirtualizingStackPanel.UpdateExtent(Boolean areItemChangesLocal) at System.Windows.Controls.VirtualizingStackPanel.ShouldItemsChangeAffectLayoutCore(Boolean areItemChangesLocal, ItemsChangedEventArgs args) at System.Windows.Controls.VirtualizingPanel.OnItemsChangedInternal(Object sender, ItemsChangedEventArgs args) at System.Windows.Controls.Panel.OnItemsChanged(Object sender, ItemsChangedEventArgs args) at System.Windows.Controls.ItemContainerGenerator.OnItemAdded(Object item, Int32 index, NotifyCollectionChangedEventArgs collectionChangedArgs) at System.Windows.Controls.ItemContainerGenerator.OnCollectionChanged(Object sender, NotifyCollectionChangedEventArgs args) at System.Windows.WeakEventManager.ListenerList`1.DeliverEvent(Object sender, EventArgs e, Type managerType) at System.Windows.WeakEventManager.DeliverEvent(Object sender, EventArgs args) at System.Collections.Specialized.NotifyCollectionChangedEventHandler.Invoke(Object sender, NotifyCollectionChangedEventArgs e) at System.Windows.Data.CollectionView.OnCollectionChanged(NotifyCollectionChangedEventArgs args) at System.Windows.WeakEventManager.ListenerList`1.DeliverEvent(Object sender, EventArgs e, Type managerType) at System.Windows.WeakEventManager.DeliverEvent(Object sender, EventArgs args) at System.Windows.Data.CollectionView.OnCollectionChanged(NotifyCollectionChangedEventArgs args) at System.Windows.Data.ListCollectionView.ProcessCollectionChangedWithAdjustedIndex(NotifyCollectionChangedEventArgs args, Int32 adjustedOldIndex, Int32 adjustedNewIndex) at System.Collections.Specialized.NotifyCollectionChangedEventHandler.Invoke(Object sender, NotifyCollectionChangedEventArgs e) at System.Collections.ObjectModel.ObservableCollection`1.OnCollectionChanged(NotifyCollectionChangedEventArgs e) at Caliburn.Micro.BindableCollection`1.OnCollectionChanged(NotifyCollectionChangedEventArgs e) at System.Collections.ObjectModel.ObservableCollection`1.InsertItem(Int32 index, T item) at Caliburn.Micro.BindableCollection`1.OnUIThread(Action action) at HibernatingRhinos.Profiler.Client.Sessions.SessionListModel.Add(SessionModel model)

And here is the relevant code:

This is called from a timer thread (not from the UI) one, and the Items collection in this case is a BindableCollection<T>.

The error is happening deep in the guts of WPF and it seems like it has been triggered by some recent Windows update. Here is the “fix” for this issue:

Basically, don’t report this error, and continue executing normally (the next UI operation will fix the UI state, usually within < 200 ms).

This is the right call in terms of development time and effort, but I got to say, this makes me feel quite uncomfortable to see a change like that.