It may not get enough attention, but we have been working on the profilers as well during the past few months.

TL;DR: You can get the next generation of NHibernate Profiler and Entity Framework Profiler now, with lots of goodies to look at!

I’m sure that a lot of people will be thrilled to hear that we dropped Silverlight and went back to a WPF UI. The idea behind Silverlight was that we would be able to deploy anywhere, including in production. But Silverlight just made things harder all around, and customers didn’t like the production profiling mode.

Production Profiling

We have changed how we profile in production. You can now make the following call in your code:

NHibernateProfiler.InitializeForProduction(port, password);

And then connect to your production system:

At which point you can profile what is going on in your production system safely and easily. The traffic between your production server and the profiler is SSL encrypted.
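A minimal sketch of where that call might live, assuming the appender's `NHibernateProfiler` class sits in the `HibernatingRhinos.Profiler.Appender.NHibernate` namespace and that `InitializeForProduction` takes an `int` port and a `string` password (the call above shows the arguments but not their types). The `ProfilerBootstrap` class and the two environment variable names are hypothetical, chosen only for illustration:

```csharp
using System;
using HibernatingRhinos.Profiler.Appender.NHibernate; // assumed namespace of the appender

public static class ProfilerBootstrap // hypothetical helper, not part of the profiler
{
    // Intended to be called once at application startup.
    public static void Start()
    {
        // Hypothetical variable names; this keeps the password out of source control.
        string portText = Environment.GetEnvironmentVariable("NHPROF_PORT");
        string password = Environment.GetEnvironmentVariable("NHPROF_PASSWORD");

        // Skip production profiling when the settings are missing or malformed.
        int port;
        if (!int.TryParse(portText, out port) || string.IsNullOrEmpty(password))
            return;

        // Assumed signature: InitializeForProduction(int port, string password).
        NHibernateProfiler.InitializeForProduction(port, password);
    }
}
```

Reading the values from the environment rather than hard-coding them means the same build can run with or without production profiling.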

NHibernate 4.x and Entity Framework vNext support

The profilers now support the latest versions of NHibernate and Entity Framework. That includes profiling async operations, better suitability for modern apps, and more.

New SQL Paging Syntax

We now properly support the SQL Server paging syntax:

    select * from Users
    order by Name
    offset 0 /* @p0 */ rows fetch next 250 /* @p1 */ rows only

This is great for NHibernate users, who finally can have a sane paging syntax as well as beautiful queries in the profiler.

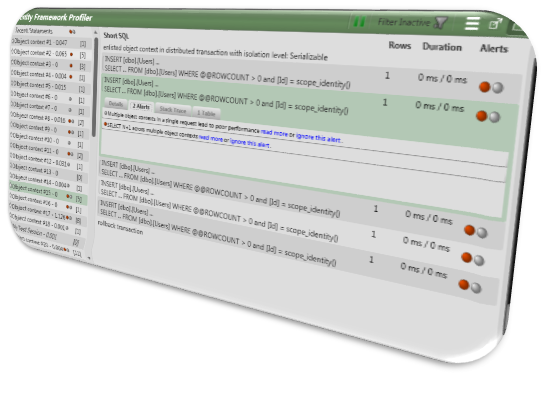

At a glance view

A lot of the time, you don’t want the profiler to be front and center; you just want to run it and have it there to glance at once in a while. The new compact view gives you just that:

You can park it somewhere on your screen and work normally, glancing over to see if it found anything. This is much less distracting than the full profiler for normal operations.

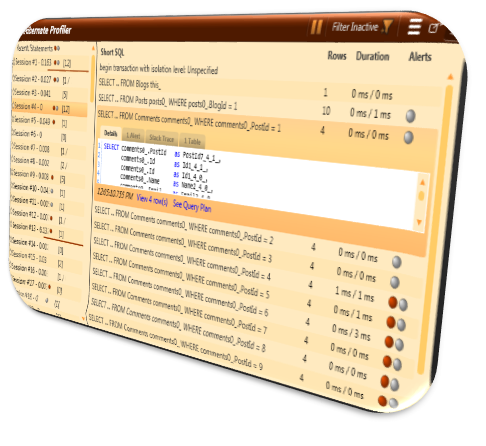

Scopes and groups

When we started working on the profilers, we followed the “one session per request” rule, and that was pretty good. But a lot of people, especially in the Entity Framework group, are using multiple sessions or data contexts in a single request, and they still want to be able to see all the operations in a request at a glance. We now allow you to group things, like this:

By default, we use the current request to group things, but we also give you the ability to define your own scopes. So if you are profiling an NServiceBus application, you can make message handling your scope by setting ProfilerIntegration.GetCurrentScopeName, or by explicitly calling ProfilerIntegration.StartScope whenever you want.
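As a rough sketch of both options for a message handler: this assumes `GetCurrentScopeName` is an assignable delegate that returns the scope name as a string, and that `StartScope` accepts a name and returns a disposable that ends the scope. Both members are named above, but their signatures are not given. Treat these shapes, the namespace, and the `OrderMessageHandler` class as assumptions to check against the appender version you have installed:

```csharp
using System;
using HibernatingRhinos.Profiler.Appender; // assumed namespace for ProfilerIntegration

public class OrderMessageHandler // hypothetical handler, for illustration only
{
    // Tracks the message being handled on the current thread (illustrative).
    [ThreadStatic]
    private static string currentMessageId;

    // Option 1: tell the profiler how to name the current scope.
    // Assumed shape: a settable delegate returning a string.
    public static void ConfigureScopes()
    {
        ProfilerIntegration.GetCurrentScopeName = () => currentMessageId;
    }

    // Option 2: open a scope explicitly around the unit of work.
    // Assumed shape: StartScope(string) returning an IDisposable.
    public void Handle(string messageId)
    {
        currentMessageId = messageId;
        using (ProfilerIntegration.StartScope("Message " + messageId))
        {
            // Open sessions or data contexts and do the work here; everything
            // they execute would be grouped under this scope in the profiler.
        }
    }
}
```

In practice you would pick one of the two options rather than combining them as this sketch does for brevity.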

Customized profiling

You can now surface troublesome issues directly from your code. If you have an issue with a query, you can mark it for attention using CustomQueryReporting.ReportError(), which flags it in the UI for further investigation.

You can also just mark interesting pieces in the UI without an error, like so:

    using (var db = new Entities(conStr))
    {
        var post1 = db.Posts.FirstOrDefault();

        using (ProfilerIntegration.StarStatements("Blue"))
        {
            var post2 = db.Posts.FirstOrDefault();
        }

        var post3 = db.Posts.FirstOrDefault();

        ProfilerIntegration.StarStatements();
        var post4 = db.Posts.FirstOrDefault();

        ProfilerIntegration.StarStatementsClear();
        var post5 = db.Posts.FirstOrDefault();
    }

Which will result in:

Disabling profiler from configuration

You can now disable the profiler by setting:

<add key="HibernatingRhinos.Profiler.Appender.NHibernate" value="Disabled" />

This will avoid initializing the profiler, obviously. The intent is that you can set up production profiling, disable it by default, and enable it selectively when you actually need to figure things out.
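For reference, a sketch of where that key would sit, assuming it belongs in the standard `appSettings` section of `web.config` or `app.config` (the `<add key="..." value="..." />` form suggests this, but it is not stated explicitly here):

```xml
<configuration>
  <appSettings>
    <!-- While this entry is present, the profiler is not initialized. -->
    <add key="HibernatingRhinos.Profiler.Appender.NHibernate" value="Disabled" />
  </appSettings>
</configuration>
```

No enabling value is named here, so the assumption is that removing or commenting out the entry lets the profiler initialize again.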

Odds & ends

We moved to WebActivatorEx from the deprecated WebActivator, added an XML file for the appender, and fixed a whole bunch of small bugs, the most important of which is:

![clip_image001[4] clip_image001[4]](http://ayende.com/blog/Images/Windows-Live-Writer/The-profilers-are-back_B25A/clip_image001%5B4%5D_thumb.png)

Linq to SQL, Hibernate and LLBLGen Profilers, RIP

You might have noticed that I talked only about the NHibernate and Entity Framework Profilers. Sales of the rest weren’t what we hoped they would be, and we are no longer going to sell them.

Go get them, there is a new release discount

You can get the NHibernate Profiler and Entity Framework Profiler for a 15% discount for the next two weeks.

I’m really happy to announce that we have just released a brand new version of NHibernate Profiler and Entity Framework Profiler.
