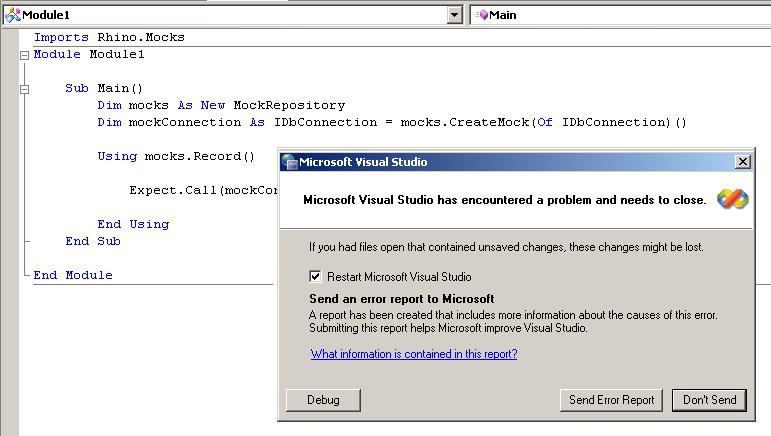

It looks like there is some confusion about how Rhino Mocks 3.3's Expect.Call support for void methods works.

Let us examine this support for an instant, shall we? Here is all the code for this feature:

```csharp
public delegate void Action();

public static IMethodOptions<Action> Call(Action actionToExecute)
{
    if (actionToExecute == null)
        throw new ArgumentNullException("actionToExecute", "The action to execute cannot be null");
    actionToExecute();
    return LastCall.GetOptions<Action>();
}
```

As you can see, this is simply a method that accepts a no-args delegate, executes it, and then returns the LastCall options. It is syntactic sugar over the usual "call the void method, then call LastCall" pattern. The important concept here is that we are using C# anonymous delegates* to get things done.
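To make the mechanics concrete, here is a minimal, self-contained sketch of the same "execute the delegate, then return whatever the last call recorded" idea, with no Rhino Mocks dependency. Recorder, FakeConnection and ExpectSketch are hypothetical stand-ins invented for illustration, not Rhino Mocks types; the sketch uses the framework's System.Action instead of declaring its own delegate, and returns a plain string where Rhino Mocks returns IMethodOptions.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical recorder standing in for a mock repository (NOT Rhino Mocks' API).
public static class Recorder
{
    public static readonly List<string> Calls = new List<string>();

    public static string LastCall
    {
        get { return Calls.Count == 0 ? null : Calls[Calls.Count - 1]; }
    }
}

// Hypothetical fake whose void methods record themselves instead of doing work.
public class FakeConnection
{
    public void Open() { Recorder.Calls.Add("Open()"); }
    public void ChangeDatabase(string name) { Recorder.Calls.Add("ChangeDatabase(" + name + ")"); }
}

public static class ExpectSketch
{
    // Same shape as Call(Action) above: validate, execute the delegate,
    // then hand back what the call inside the delegate just recorded.
    public static string Call(Action actionToExecute)
    {
        if (actionToExecute == null)
            throw new ArgumentNullException("actionToExecute");
        actionToExecute();
        return Recorder.LastCall;
    }
}

public static class Program
{
    public static void Main()
    {
        FakeConnection connection = new FakeConnection();
        string recorded = ExpectSketch.Call(delegate { connection.ChangeDatabase("myCustomer"); });
        Console.WriteLine(recorded); // ChangeDatabase(myCustomer)
    }
}
```

The anonymous delegate captures the local connection variable and the "myCustomer" argument, so the call only happens inside ExpectSketch.Call, which is exactly the point where it can be recorded.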

Let us see how it works:

```csharp
[Test]
public void InitCustomerRepository_ShouldChangeToCustomerDatabase()
{
    IDbConnection mockConnection = mocks.CreateMock<IDbConnection>();
    Expect.Call(delegate { mockConnection.ChangeDatabase("myCustomer"); });
    mocks.ReplayAll();

    RepositoryFactory repositoryFactory = new RepositoryFactory(mockConnection);
    repositoryFactory.InitCustomerRepository("myCustomer");

    mocks.VerifyAll();
}
```

This is how we handle void methods that have parameters, but as it turns out, we can do better for void methods with no parameters:

```csharp
[Test]
public void StartUnitOfWork_ShouldOpenConnectionAndTransaction()
{
    IDbConnection mockConnection = mocks.CreateMock<IDbConnection>();
    Expect.Call(mockConnection.Open);               // void method call
    Expect.Call(mockConnection.BeginTransaction()); // normal Expect call
    mocks.ReplayAll();

    RepositoryFactory repositoryFactory = new RepositoryFactory(mockConnection);
    repositoryFactory.StartUnitOfWork("myCustomer");

    mocks.VerifyAll();
}
```

Notice the second line: we are not calling the mockConnection.Open() method; instead, we are using C#'s ability to infer delegates from method groups, which means that the code is actually equivalent to:

```csharp
Expect.Call(new Action(mockConnection.Open));
```

The Call(Action) method then executes that delegate, so Open() is invoked on the mock while it is still in record mode, exactly as if we had called it directly.
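The delegate inference described above can be checked in isolation. In this sketch (FakeConnection is a hypothetical class, not IDbConnection), assigning the method group connection.Open to an Action does not call the method; the explicit new Action(...) form and the anonymous-delegate form behave the same way, running Open only when the delegate is invoked.

```csharp
using System;

// Hypothetical class used only to count how often Open runs.
public class FakeConnection
{
    public int OpenCount;
    public void Open() { OpenCount++; }
}

public static class Program
{
    public static void Main()
    {
        FakeConnection connection = new FakeConnection();

        // Method group conversion: no call happens here, the compiler
        // just creates a delegate bound to connection.Open.
        Action inferred = connection.Open;
        // The explicit form that the line above is shorthand for.
        Action explicitDelegate = new Action(connection.Open);
        // The anonymous-delegate form used for methods that take parameters.
        Action anonymous = delegate { connection.Open(); };

        Console.WriteLine(connection.OpenCount); // 0: nothing has run yet

        inferred();
        explicitDelegate();
        anonymous();

        Console.WriteLine(connection.OpenCount); // 3
    }
}
```

This is why Expect.Call(mockConnection.Open) is safe: the argument is a delegate, not the result of calling Open, so the call happens inside Call(Action) rather than before it.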

I hope that this will make it easier to understand how to use this new feature.

Happy Mocking,

~ayende

* Sorry VB guys, this is not a VB feature, but I am not going to rewrite VBC to support this :-) You will be able to take advantage of this in VB9, though, so rejoice.