One of the things that you keep hearing about Microsoft products is how much time and effort is dedicated to ensuring Backward Compatibility. I have had a lot of arguments with Microsoft people about certain design decisions that they have made, and usually the argument ended up with “We have to do it this was to ensure Backward Compatibility”.

That sucked, but at least I could sleep soundly, knowing that if I had software running on version X of Microsoft, I could at least be pretty sure that it would work in X+1.

Until Entity Framework 4.1 Update 1 shipped, that is. Frans Bouma has done a great job in describing what the problem actually is, including going all the way and actually submitting a patch with the code required to fix this issue.

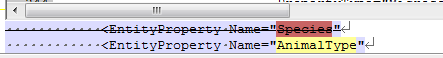

But basically, starting with Entity Framework 4.1 Update 1 (the issue also appears in Entity Framework 4.2 CTP, but I don’t care about this much now), you can’t use generic providers with Entity Framework. Just to explain, generic providers is pretty much the one way that you can integrate with Entity Framework if you want to write a profiler or a cacher or a… you get the point.

Looking at the code, it is pretty obvious that this is not intentional, but just someone deciding to implement a method without considering the full ramifications. When I found out about the bug, I tried figuring out a way to resolve it, but the only work around would require me to provide a different assembly for each provider that I wanted to support (and there are dozens that we support on EF 4.0 and EF 3.5 right now).

We have currently implemented a work around for SQL Server only, but if you want to use Entity Framework Profiler with Entity Framework 4.1 Update 1 and a database other than SQL Server, we would have to create a special build for your scenario, rather than have you use the generic provider method, which have worked so far.

Remember the beginning of this post? How I said that Backward Compatibility is something that Microsoft is taking so seriously?

Naturally I felt that this (a bug that impacts anyone who extends Entity Framework in such a drastic way) is an important issue to raise with Microsoft. So I contacted the team with my finding, and the response that I got was basically: Use the old version if you want this supported.

What I didn’t get was:

- Addressing the issue of a breaking change of this magnitude that isn’t even on a major release, it is an update to a minor release.

- Whatever they are even going to fix it, and when this is going to be out.

- Whatever the next version (4.2 CTP also have this bug) is going to carry on this issue or not.

I find this unacceptable. The fact that there is a problem with a CTP is annoying, but not that important. The fact that a fully release package has this backward compatibility issue is horrible.

What makes it even worse is the fact that this is an update to a minor version, people excepts this to be a safe upgrade, not something that requires full testing. And anyone who is going to be running Update-Package in VS is going to hit this problem, and Update-Package is something that people do very often. And suddenly, Entity Framework Profiler can no longer work.

Considering the costs that the entire .NET community has to bear in order for Microsoft to preserve backward compatibility, I am deeply disappointed that when I actually need this backward compatibility.

This is from the same guys that refused (for five years!) to fix this bug:

new System.ComponentModel.Int32Converter().IsValid("yellow") == true

Because, and I quote:

We do not have the luxury of making that risky assumption that people will not be affected by the potential change. I know this can be frustrating for you as it is for us. Thanks for your understanding in this matter.

Let me put this to you in perspective, anyone who is using EF Prof is likely to (almost by chance) to get hit by that. When this happens, our only option is to tell them to switch back a version?

That makes us look very bad, regardless of what is the actual reason for that. That means that I am having to undermine my users' trust in my product. "He isn't supporting 4.1, and he might not support 4.2, so we probably can't buy his product, because we want to have the newest version".

This is very upsetting. Not so much about the actual bug, those happen, and I can easily imagine the guy writing the code making assumptions that turn out to be false. Heavens know that I have done the same many times before. I don’t even worry too much about this having escaped the famous Microsoft Testing Cycle.

What (to be frank) pisses me off is that the response that we got from Microsoft for this was that they aren’t going to fix this. That the only choice that I have it to tell people to downgrade if they want to use my product (with all the implications that has for my product).

![]()

![image_thumb[1] image_thumb[1]](http://ayende.com/Blog/images/ayende_com/Blog/WindowsLiveWriter/LightSwitchonthewire_8EF2/image_thumb%5B1%5D_f8593434-3dd0-471a-99bf-9fb9149ff5d8.png)