I got a couple of complaints about this, and I think that this is a really important issue. Using NH Prof slow down the profiled application. That is to be expected, since we require the application to do more work, but it is not as good as we can hope it would be.

I got a couple of complaints about this, and I think that this is a really important issue. Using NH Prof slow down the profiled application. That is to be expected, since we require the application to do more work, but it is not as good as we can hope it would be.

Here is the test scenario, running against the Northwind database (thanks Davy for supplying it):

class Program

{

static void Main(string[] args)

{

var sp = Stopwatch.StartNew();

HibernatingRhinos.NHibernate.Profiler.Appender.NHibernateProfiler.Initialize();

ISessionFactory sessionFactory = CreateSessionFactory();

using (var session = sessionFactory.OpenSession())

{

var products = session.CreateCriteria(typeof(Product))

.SetCacheable(true).List<Product>();

}

using (var session = sessionFactory.OpenSession())

{

var products = session.CreateCriteria(typeof(Product))

.SetCacheable(true).List<Product>();

}

using (var session = sessionFactory.OpenSession())

{

var products = session.CreateCriteria(typeof(Product))

.SetCacheable(true).List<Product>();

}

using (var session = sessionFactory.OpenSession())

{

var products = session.CreateCriteria(typeof(Product))

.SetCacheable(true).List<Product>();

}

using (var session = sessionFactory.OpenSession())

{

var products = session.CreateCriteria(typeof(Product))

.SetCacheable(true).List<Product>();

}

using (var session = sessionFactory.OpenSession())

{

var products = session.CreateCriteria(typeof(Product))

.SetCacheable(true).List<Product>();

}

Console.WriteLine(sp.ElapsedMilliseconds);

}

private static ISessionFactory CreateSessionFactory()

{

Configuration configuration = new Configuration()

.Configure()

.AddAssembly("nhprof_experiment");

return configuration.BuildSessionFactory();

}

}

With NH Prof active, this takes: 13 - 16 seconds to run.

Without NH Prof running, this takes: 12 seconds to run.

The reason for that difference is probably that NH Prof is doing some work when new events come in, so it steals some CPU time from the other process.

Disabling NH Prof (commenting the NHibernateProfiler.Initialize()), this takes 4 - 6 seconds to run. This is a huge difference.

I decided to see whatever this is something that I can actually do something about, and turned the NHibernateAppender into a no op. So the next run would measure just the time that it takes to NHibernate to generate the event that I care about, and not whatever processing I am doing on the profiled application side.

The result both surprised and thrilled me: 4.8 seconds!

Why was I happy about this?

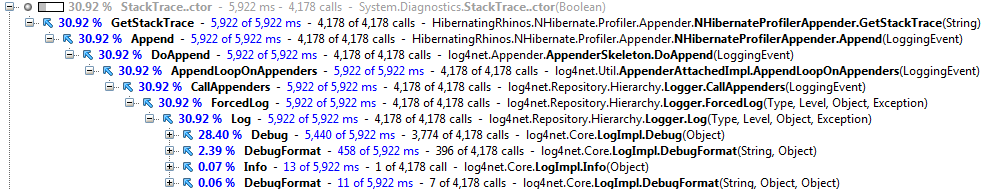

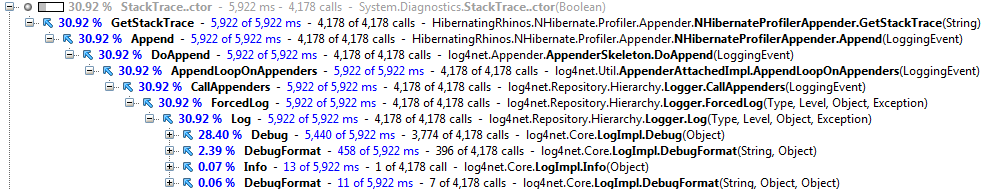

Because it meant that I was actually at fault here. And if I was actually at fault, it means that I can fix things. I pulled up the trusty dotTrace Profiler, and quickly identified a hot spot:

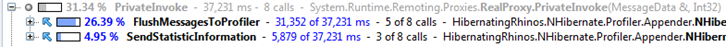

Well, in this case, you need to understand a bit about how NH Prof get the data from the profiled application. We gather the event stream and batch send it to the actual profiler. Those sends are RPC calls, and it is expected that they would be slow. Except, they are not happening in the same thread as the actual application, so they should have little effect on the actual performance of the test scenario that we have above.

I decided to try it out again, this time, we will change FlushMEssagesToProfiler and SendStatsticInformation to no ops, and see what happens.

Running the application again, we got runtime of 13 seconds. That confirmed my original suspicion, that while those actions takes a long time, they do not contribute to the actual perf problem in the actual code.

I run the code again under the profiler, looking deeper. That led me to this discovery:

Well, I knew that stack trace was slow, but I sincerely thought that I was doing better job in reducing the number of times that I had to call it. Calling it 4,178 times is obviously wrong.

We track the stack trace for the following event sources:

- NHibernate.SQL

- NHibernate.Event.Default.DefaultLoadEventListener

- NHibernate.Cache.StandardQueryCache

- NHibernate.Transaction.AdoTransaction

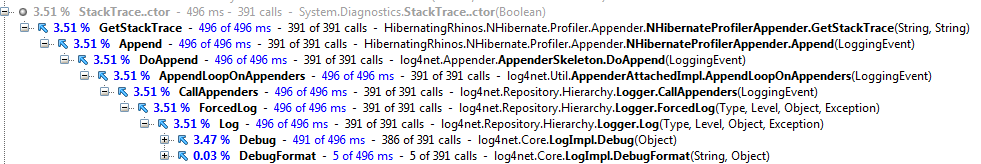

The problem is that some things, like DefaultLoadEventListener, are generating a lot of events that I don't really care about. I started approaching the problem by applying filters, so I would only ever create the stack trace for the events I truly care about. That brought the cost down significantly, so now it took 6 - 7 seconds. We shaved 5 - 10 seconds of the total running time, awesome.

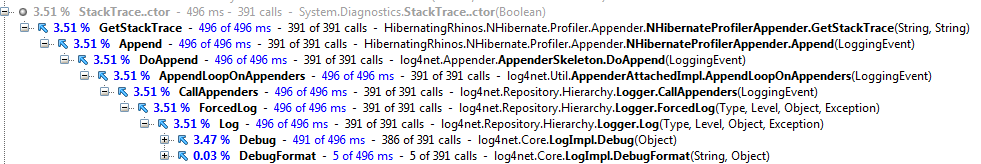

But I think that we can still do better than that. Let us see what dotTrace is saying:

dotTrace is saying that we now reduced the time this takes from 31% to 3.5%. We have better things to do than to look at this piece of code again, then.

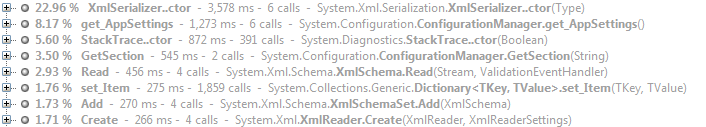

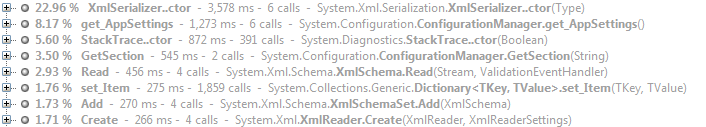

Let us focus on something that will give us more benefit, then:

This is actually the initial RPC call between the appender and the profiler. It establish the TCP connection, among other things, so it takes a while to process. Almost 0.7 seconds is a lot of time. I changed that code to run on a background thread, further improving the startup time of the application.

Time to execute the code now? 5.8 seconds.

Time to go to dotTrace again, I think.

And we can see that what dominate the actual time for the test is the startup concerns of NHibernate. But I think that in a real scenario, the time for generating a stack trace would again become a meaningful for performance.

There are probably additional things that I can do to optimize this, but I think that this is a great step. To remind you, the initial costs were:

- No profiler: 4 - 6 seconds

- With profiler: 13 - 16 seconds

After the changes, we are with: 5 - 7 seconds, which I consider entirely reasonable.

And for a few hours of performance tuning, reducing the time by 8-9 seconds and by over 60%, I think I did good.

The code pass all the tests and was committed, so in about ten minutes, you would be able to download it from the NH Prof site.

I got a couple of complaints about this, and I think that this is a really important issue. Using NH Prof slow down the profiled application. That is to be expected, since we require the application to do more work, but it is not as good as we can hope it would be.

I got a couple of complaints about this, and I think that this is a really important issue. Using NH Prof slow down the profiled application. That is to be expected, since we require the application to do more work, but it is not as good as we can hope it would be.