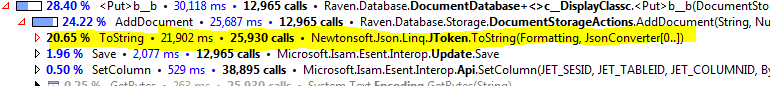

Optimizing “expensive” calls

Take a look at the following code:

It is obvious where we need to optimize, right?

Except… each call here takes about 0.8 milliseconds. Yes, we could probably optimize this further, but the question is, would it be worth it?

Given sub-millisecond performance, and given that implementing a different serialization format would be an expensive operation, I think that there just isn’t enough justification to do so.

Comments

How complex is the data? If you do care about this, I'm pretty confident I could get you some noticeable performance increase without any change to your object model, using the (not fully released, but pretty stable) protobuf-net "v2" build.

In particular, this:

has a metadata abstraction layer, so you don't need to decorate your model with attributes if you want to keep it unaware of serialization

has full ILGenerator pre-compilation (even to a dll if you really want) for performance

(and of course the usual protobuf advantages of not needing to do as much UTF encoding or string matching)

I've got some examples where I have to do large loops, because the measurement is in the low microseconds.

Marc :-)
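The looping approach mentioned above, for operations too fast to time individually, can be sketched roughly as follows. This is only an illustration under stated assumptions: it uses Python's standard `json` module as a stand-in rather than Json.NET or protobuf-net, and the helper name `average_call_seconds` and the sample document are hypothetical, not taken from the post.

```python
import json
import time


def average_call_seconds(func, iterations=10_000):
    """Return the mean wall-clock time of one call to func.

    A single call in the microsecond range is too short to time reliably,
    so the call is repeated in a loop and the total is divided by the count.
    """
    start = time.perf_counter()
    for _ in range(iterations):
        func()
    elapsed = time.perf_counter() - start
    return elapsed / iterations


# Hypothetical sample document; the real workload is not shown in the post.
sample = {"Id": "users/1", "Name": "example", "Tags": ["a", "b", "c"]}

per_call = average_call_seconds(lambda: json.dumps(sample))
print(f"json.dumps: {per_call * 1e6:.2f} microseconds per call")
```

The printed figure depends on the machine and the document, so treat it as a relative number for comparing alternatives, not as an absolute cost.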

The data is any arbitrary JSON document.

Fair enough. That won't help at all, then ;-p (feel free to delete the comment if you want to avoid any unhelpful distraction)

I do wonder, though, if I should try a second project to take my existing IL-generator and change the leaf-nodes to handle xml / json / something else. Could be interesting ;-p

I'm a strong believer in 'selectively' optimizing where it makes sense. I actually think it does make sense to aggressively optimize serialization, as it is ultimately used on every request, so every optimization you can make improves the performance of your application as a whole. Personally I think application performance / perceived performance is the primary goal of all successful internet software companies. For a good example of an optimized HTML5 site, view the source and traffic of Yahoo's new search page (see: inline images :):

http://search.yahoo.com/

Speed is the main reason why I'm not using JSON serialization for serializing POCOs. I don't think there is anything inherently wrong with the format, I just haven't found a JSON library with good performance. 0.8 ms doesn't seem like much here, so maybe it's not worth it for this case, but bigger datasets mean longer times, and I'm used to having entire web service requests finish in < 1 ms.

Using the Northwind dataset, I've benchmarked all leading serialization routines I could find in .NET here:

www.servicestack.net/.../...-times.2010-02-06.html

@Marc Gravell's protobuf-net binary serialization is the clear leader here (makes every other binary serializer redundant) while I do ok with the leading text-serializer.

Yes please do! I don't think you should worry about XML as MS's implementation is actually pretty good, and well if you use XML you're going to be more concerned with interoperability than performance. But there is a big potential for perf gains in the JSON-space - all .NET ajax apps thank you in advance for your efforts :)

Marc,

That might be nice, but the real issue here is that we have a JSON document being saved into a file, nothing else.

There isn't really a place for IL gen here. It isn't an object-to-JSON conversion.

I mistakenly assumed that this was doing a serialization step.

I'll get my coat.

Whenever looking at the results of a profiler run, I remind myself that unnecessary calls are as important as slow calls, and often more so once the obvious slow calls have been addressed. With that in mind, does AddDocument need to call JToken.ToString twice?

Jeremy,

Yes, it calls that on two different objects.

I was sure you had that covered but just had to check. ;)

There isn't any reflection involved here, just simple iteration over collections of known objects and then writing values as JSON text.

I think it would be possible to improve performance here a little: under the covers the JSON is being written by a class called JsonTextWriter. Like any good public API, JsonTextWriter can write to any TextWriter, checks for errors and updates some public properties with state. Since the contents of JObject et al. are already guaranteed to be valid and will always be output to a string, it could have its own internal writer which is optimized for writing to a string and forgoes error checking and state.

If performance is important, that is an idea you could look at. I haven't done it myself because I think sub 1ms is plenty fast enough for average use already, and in my opinion there are more important features in a serializer, but you obviously have different needs. Happy to include the source in future releases if you do write something! :)

~J

Can you serialize many objects at once with threads (keep some 'serialize' threads running, looking for work)?

May be overkill :)

Very interesting: Newtonsoft.Json is faster than BinaryFormatter.

Probably you need to process the JSON only because you have to make sure the ID and Version attributes are set. If so, you could just copy the doc from a JSON reader to a JSON writer, adding the ID and version if necessary.

What tool do you use, for creating the "report" with the expensive calls?

Thomas,

This is dotTrace, a wonderful tool from JetBrains

Thank you

BSON,

No, I didn't know about that, thanks, I'll try it.
