Can you guess what this picture is?

This is a partial image of a trace from a simple ASPX page. Just look at the number of controls that are involved in producing a page. Now go figure out an issue with the page lifecycles of siblings, please.

I just want to know, whose bright idea was it to allow anonymous namespaces in XML but not in XPath?

There are two gotchas associated with working with large text fields with NHibernate.

The first is that you must specify type="StringClob" (or ColumnType = "StringClob" in Active Record), if you are using SQL Server, since NHibernate needs to set the Size property on the parameters.

The second is that if you plan on letting NHibernate generate your schema, you need to specify sql-type="NTEXT" (or SqlType="NTEXT" in Active Record), otherwise NHibernate will generate an NVARCHAR field.

This is more of a public service announcement: Query Express Plus is a tiny, standalone GUI for SQL Server (and other DBs). It is great for working on the server, where you can't (or don't want to) install SSMS.

It does exactly what I want it to do, and I use it quite a bit to check on my servers' behavior. Put it on your keychain!

Do they mean this?

In my last post, I showed how to use the Ajax Generators, but I didn't really explain why it is good to learn yet another way to do Ajax. Here is the example that I used:

This will send the following to the client:

(Replace gridContent with a lot of HTML, of course). Okay, so I get nicer error handling if I have an error (always a good thing), but I don't see the value of it. Let us consider the case where we don't have something as simple as straight rendering to the client, shall we?

A good example would be a shopping cart: if the total price of the items reaches a certain threshold, the user should get free shipping. Now that we have a conditional, the code isn't this simple to write. Add about 15 lines of JS for each condition, and you are in a world of hurt.

This is where the generators come in really handy. You aren't manipulating text, but objects, which means that you get to take advantage of advanced programming language constructs like conditionals and looping. Let us see how we can implement the shopping cart functionality. We will start with the logic, which in this case is sitting in the controller (probably not a good idea for non-demo scenarios):

private string[] products = { "Milk", "Honey", "Pie" };

public void Index()
{
    PropertyBag["cart"] = Cart;
    PropertyBag["products"] = products;
}

public void AddItem(int id)
{
    // Record whether the user qualified for free shipping before this item was added.
    PropertyBag["alreadyHadfreeShipping"] = Cart.Count > 4;
    Cart.Add(products[id]);
    // The key spelling matches the one used in AddItem.brailjs.
    PropertyBag["produdct"] = products[id];
    // Business rule: more than 4 items means free shipping.
    PropertyBag["freeShipping"] = Cart.Count > 4;
    RenderView("AddItem.brailjs");
}

public void ClearAllItems()
{
    PropertyBag["alreadyHadfreeShipping"] = Cart.Count > 4;
    InitCart();
    RenderView("ClearAllItems.brailjs");
}

Cart is a property that exposes an ArrayList saved to the user session. You can see that we are passing the decisions to the views, to act upon. There is a business rule that says that if you buy more than 4 items you get free shipping, and that is what is going on here. No dealing with the UI at all.

Now, let us see the views. We will start with the main one, index.brail:

<?brail import Boo.Lang.Builtins ?>
<h2>Demo shopping cart:</h2>
<ul>
<?brail for i in range(products.Length):?>
    <li>
        ${ajax.LinkToRemote(products[i],'addItem.rails?id='+i,{})}
    </li>
<?brail end ?>
</ul>
<div id="freeShipping" style="display: none;">
    <b>You are eligible for free shipping!</b>
</div>
<h2>Purchased Products:</h2>
<p>
    ${ajax.LinkToRemote('Clear','clearAllItems.rails',{})}
</p>
<ul id="products">
<?brail for product in cart: ?>
    <li>${product}</li>
<?brail end ?>
</ul>

The first line shows an interesting trick. By default, Brail removes the builtin namespace, because common names such as list and date exist there. Here, I want to use the range function, so I just import it and continue as usual. Beyond that, there is nothing particularly interesting about this view, I think.

Let us see the addItem.brailjs file first. It handles updating the page to reflect the new item in the cart:

page.InsertHtml('bottom', 'products',"<li>${produdct}</li>")
if freeShipping:
    page.Show('freeShipping')
    if not alreadyHadfreeShipping:
        page.VisualEffect('Highlight','freeShipping')
    end
end

As you can see, it contains conditional logic, but this logic is strictly UI focused. If the user is eligible for free shipping, show the notice; if this is the first time, highlight the fact that they got free shipping, so they will notice.

I would like to see similar functionality done the other way, but I do not think that I would care to write it...
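To make the comparison a little more concrete, here is a rough sketch of what the client-side half of "the other way" might look like. It is only an illustration: the response shape (`product`, `freeShipping`, `alreadyHadFreeShipping`) and the `applyAddItemResponse` function are assumptions of mine, and the `page` object is a stand-in for the DOM so that the sketch runs outside a browser.

```javascript
// Hypothetical hand-written counterpart to addItem.brailjs.
// `page` is a tiny stand-in for the DOM; the response fields are assumed,
// not something the controller above actually sends.
function createFakePage() {
  return {
    products: [],        // <li> entries appended to the #products list
    visible: new Set(),  // ids of elements that are currently shown
    highlighted: [],     // ids that received a highlight effect
  };
}

function applyAddItemResponse(page, response) {
  // Append the new product to the list.
  page.products.push("<li>" + response.product + "</li>");

  if (response.freeShipping) {
    // Show the free shipping notice.
    page.visible.add("freeShipping");
    // Only draw attention to it the first time the user qualifies.
    if (!response.alreadyHadFreeShipping) {
      page.highlighted.push("freeShipping");
    }
  }
  return page;
}

const page = createFakePage();
applyAddItemResponse(page, { product: "Milk", freeShipping: false, alreadyHadFreeShipping: false });
applyAddItemResponse(page, { product: "Pie", freeShipping: true, alreadyHadFreeShipping: false });
applyAddItemResponse(page, { product: "Honey", freeShipping: true, alreadyHadFreeShipping: true });
console.log(page.products.length, [...page.visible], page.highlighted);
```

Even in this stripped-down form, the server has to serialize the flags, and the client has to parse them and repeat the branching. Real code would also need the Ajax call, the error handling, and the actual DOM and effect calls.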

Using generators gives you code that is highly maintainable in the future, and doesn't move business logic to the UI or UI logic to the business logic, as often happens (by necessity) with other methods.

I am currently trying to convince a client that we really should use MonoRail for the project. After explaining the benefits and sending a couple of tutorials his way, I had a few minutes to sit with him about it and we talked code:

<head>
    <title>Exesto</title>
    <link rel="stylesheet"
          type="text/css"
          href="/style.css"
          media="screen, print" />
    ${Ajax.InstallScripts()}
    ${Scriptaculous.InstallScripts()}
</head>

His main objection at the moment is:

I need to work on my explanation skills...

I mentioned that MonoRail recently acquired Ajax Generators. The generators are different from normal templates, because they do not generate HTML, but rather modify the page that was already rendered. It will be easier to explain with an example. Let us take the simple example of paging a grid without a full post back, shall we? By the way, this turned out to be a lot more focused on WebForms than I intended, more on that later.

Here is the backend of this demo:

public void Index(bool isAjax)
{
    PropertyBag["subjects"] = PaginationHelper.CreateCachedPagination(
        this, Action, 15, delegate
        {
            return new List<Subject>(Repository<Subject>.FindAll()).ToArray();
        });
    if (isAjax)
        RenderView("index.brailjs");
}

Simple: get the items from the database and store them in the cache, as well as passing them to the view. For now, please ignore the last two lines...

Now, let us take a look at our view, shall we? There is a layout that I will not touch now (if you don't understand MonoRail terms, think about it as a MasterPage), but let us focus on the action's view, index.brail:

<script type='text/javascript'>
function error(e)
{
    alert(e);
}

function paging(index)
{
    var url = '/faq/index.rails';
    var pars = 'page=' + index +'&isAjax=true';
    new Ajax.Request(url,{method: 'get', evalScripts: true, parameters: pars, onException: error});
}
</script>
<h2>Subjects for questions:</h2>
<div id='paginatedGrid'>
    <?brail OutputSubView('grid') ?>
</div>

What we have here is merely a bit of JavaScript and a div to put the grid in. We also use OutputSubView for the grid (again, in WebForms terms, this is something like a UserControl). The most complex part is the grid.brail view itself:

<?brail
component GridComponent, {'source':subjects}:
    section header:
?>
        <th id='header'>Id</th>
        <th id='header'>Name</th>
        <th id='header'>Browse</th>
<?brail
    end
    section item:
?>
        <tr id='item'>
            <td>${item.Id}</td>
            <td>${item.Name}</td>
            <td>${ HtmlHelper.LinkTo('Browse','faq','showQuestions', item.Id) }</td>
        </tr>
<?brail
    end
    section alternateItem:
?>
        <tr id='alternateItem'>
            <td>${item.Id}</td>
            <td>${item.Name}</td>
            <td>${ HtmlHelper.LinkTo('Browse','faq','showQuestions', item.Id) }</td>
        </tr>
<?brail
    end
    section link:
?>
        <a href='/faq/index.rails?page=${pageIndex}'
           onclick='paging(${pageIndex});return false;'>${title}</a>
<?brail
    end
end
?>

The GridComponent is somewhere in the middle between GridView and Repeater, since it can do almost everything on its own, but lets you override parts of the rendering in place (for instance, alternateItem). Please pay some attention to the last section, the link. This is a pagination link that can be used to override the default behavior of moving to the next page. I am overriding it with a

Now that we have established the page, we can play with it a bit, and see that it is working great. Except that there is still the last piece of the puzzle, paging without refreshing the full page.

Go check the JavaScript in index.brail. Notice that it is making an Ajax request to /faq/index.rails? And that it is passing isAjax=true? Now go and check the Index() method, and look at the last two lines. If this is an Ajax request, we use a different view for the output, index.brailjs:

page.ReplaceHtml('paginatedGrid', {@partial : 'faq/grid' })
page.VisualEffect('Highlight', 'paginatedGrid')

Now, what is going on here... this certainly doesn't look like any Ajax framework I have ever seen...

Remember that I spoke about the Ajax generators? This is it. You may think about it as a small DSL for generating the JavaScript that modifies the page. What we have here is a call to ReplaceHtml, which will replace the content of the paginatedGrid element with something else, usually a string. But in this case we pass in a @partial (@symbol is identical to :symbol in Ruby, but this is a temporary syntax at the moment, I want to get rid of the {} ), which means that we ask to replace the element with the results of running the grid template. The second line is just to provide some feedback to the user that something has changed. So, in essence, it took 4 lines of code to
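If the "small DSL that generates JavaScript" idea is hard to picture, here is a toy sketch of it. This is not MonoRail's implementation: `createGenerator`, `replaceHtml`, `visualEffect` and `renderGridPartial` are names I made up for the illustration, and the emitted `Element.update` and `Effect.Highlight` calls are just modeled on the Prototype and script.aculo.us style of API. The point is only that calls on an object get recorded and come out the other end as script text for the browser to evaluate.

```javascript
// Toy illustration of the generator idea: calls are recorded and turned into
// JavaScript source text. Not MonoRail's actual code; all names are hypothetical.
function createGenerator() {
  const lines = [];
  return {
    // Emit a statement that replaces the content of an element.
    replaceHtml(id, html) {
      lines.push(`Element.update(${JSON.stringify(id)}, ${JSON.stringify(html)});`);
    },
    // Emit a statement that runs a named visual effect on an element.
    visualEffect(name, id) {
      lines.push(`new Effect.${name}(${JSON.stringify(id)});`);
    },
    // Join everything that was recorded into one script.
    toScript() {
      return lines.join("\n");
    },
  };
}

// Stand-in for rendering the grid partial on the server.
function renderGridPartial() {
  return "<table><tr><td>1</td></tr></table>";
}

const gen = createGenerator();
gen.replaceHtml("paginatedGrid", renderGridPartial());
gen.visualEffect("Highlight", "paginatedGrid");
const script = gen.toScript();
console.log(script);
```

Because the view code is working with an object rather than concatenating strings, quoting and escaping of the HTML are handled in one place (here, by `JSON.stringify`).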

But why invent a whole new syntax just to use ajax? Isn't Javascript enough? More on that next post...

There is a running joke in the office about one shady guy that digs really deep into an issue. It goes like this:

Dev: I just got a NullReferenceException!

Shady Guy: Give me a second to install the kernel debugger and we will fix it in no time.

The problem is that right now I feel like I am the butt of the joke. For the last [undisclosed for shame reasons] hours I have been struggling with an Ajax issue that I couldn't figure out. As I mentioned before, I have very little experience with Ajax. In light of recent additions to MonoRail, I decided that it is time to dedicate some cycles in that direction. Hammett has already added (awesome) Ajax support to MonoRail, and I have extended that a bit in Brail (more on that later), which deserves at least a few demos to show around.

Anyway, I already said that I had a problem, a big one. My scripts weren't being evaluated properly when they returned from the server. I debugged the server, I debugged Brail, I debugged the view, and at one point I even considered debugging Firefox. I had enough trace tools running to equip a small NSA lab, and enough JavaScript documentation to hurt the eye.

Only at the end, I started debugging the javascript library itself... after which I discovered that it will only evaluate the scripts if they were sent with "text/javascript" content-type, which I didn't use.
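For anyone who hits the same wall, the behavior boils down to something like the following. This is a simplified model, not the library's actual code; `shouldEvaluate` and `handleResponse` are hypothetical names, and the response objects are plain stand-ins.

```javascript
// Simplified model of the behavior described above: the response body is only
// evaluated as script when its Content-Type is text/javascript.
// The function names are hypothetical; this is not the library's real code.
function shouldEvaluate(contentType) {
  if (!contentType) return false;
  // Ignore parameters such as "; charset=utf-8" and compare case-insensitively.
  const mediaType = contentType.split(";")[0].trim().toLowerCase();
  return mediaType === "text/javascript";
}

function handleResponse(response, evaluate) {
  if (shouldEvaluate(response.headers["Content-Type"])) {
    evaluate(response.body);
    return true;
  }
  // The body is silently ignored, which looks exactly like "nothing happened".
  return false;
}

const evaluated = [];
const run = (body) => evaluated.push(body);
handleResponse({ headers: { "Content-Type": "text/html" }, body: "alert(1)" }, run);
handleResponse({ headers: { "Content-Type": "text/javascript; charset=utf-8" }, body: "alert(2)" }, run);
console.log(evaluated);
```

The nasty part is the silent `false` branch: no error, no warning, just a script that never runs.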

Damn, I hate that feeling of missing the Oh So Obvious!

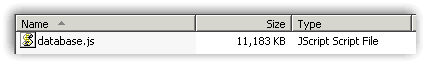

I was asked if I could interface to an existing database, so I took a look*:

Somehow I do not think that I can make it interface with NHibernate.

* To be fair, this is a client-side Ajax solution (think CD-ROM, not web), so this is actually a clever idea for the problem they were trying to solve.
