Raven.Munin

Raven.Munin is the actual implementation of a low-level managed storage for RavenDB. I split it out of the RavenDB project because I intend to make use of it in additional projects.

At its core, Munin provides a high-performance, transactional, non-relational data store written completely in managed code. The main point in writing it was to support the managed storage in RavenDB, but it is going to be used for Raven MQ as well, and probably for a bunch of other stuff too. I’ll post about Raven MQ in the future, so don’t bother asking about it.

Let us look at a very simple API example. First, we need to define a database:

    public class QueuesStorage : Database
    {
        public QueuesStorage(IPersistentSource persistentSource)
            : base(persistentSource)
        {
            Messages = Add(new Table(key => key["MsgId"], "Messages")
            {
                {"ByQueueName", key => key.Value<string>("QueueName")},
                {"ByMsgId", key => new ComparableByteArray(key.Value<byte[]>("MsgId"))}
            });
            Details = Add(new Table("Details"));
        }

        public Table Details { get; set; }
        public Table Messages { get; set; }
    }

This is a database with two tables, Messages and Details. The Messages table has a primary key of MsgId and two secondary indexes: one by queue name and one by message id. The Details table is sorted by the key itself.
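The ByMsgId index wraps the raw byte[] in ComparableByteArray, presumably because .NET arrays compare by reference and have no natural ordering, so they can't be sorted in an index as-is. Below is a minimal sketch of what such a wrapper could look like. It assumes unsigned lexicographic ordering, and ByteKey is a hypothetical name, not Munin's ComparableByteArray.

```csharp
using System;

// Hypothetical stand-in for a comparable byte-array key (not Munin's ComparableByteArray).
// Arrays use reference equality in .NET, so an index over them needs a wrapper
// that defines value equality and a total ordering.
public sealed class ByteKey : IComparable<ByteKey>, IEquatable<ByteKey>
{
    private readonly byte[] bytes;

    public ByteKey(byte[] bytes)
    {
        this.bytes = bytes ?? throw new ArgumentNullException(nameof(bytes));
    }

    // Unsigned lexicographic comparison; a prefix sorts before any longer array it starts.
    public int CompareTo(ByteKey other)
    {
        if (other is null) return 1;
        int common = Math.Min(bytes.Length, other.bytes.Length);
        for (int i = 0; i < common; i++)
        {
            int diff = bytes[i].CompareTo(other.bytes[i]);
            if (diff != 0) return diff;
        }
        return bytes.Length.CompareTo(other.bytes.Length);
    }

    public bool Equals(ByteKey other) => CompareTo(other) == 0;

    public override bool Equals(object obj) => obj is ByteKey other && Equals(other);

    // Hash over the contents so equal keys land in the same bucket.
    public override int GetHashCode()
    {
        int hash = 17;
        foreach (byte b in bytes) hash = hash * 31 + b;
        return hash;
    }
}
```

Because byte is unsigned in C#, a value such as 200 sorts after 2. That ordering is what a byte-oriented index needs.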

It is important to understand one central concept about Munin: data stored in it is composed of two parts, the key and the data. It is easier to explain when you look at the API:

    bool Remove(JToken key);
    Put(JToken key, byte[] value);
    ReadResult Read(JToken key);

    public class ReadResult
    {
        public int Size { get; set; }
        public long Position { get; set; }
        public JToken Key { get; set; }
        public Func<byte[]> Data { get; set; }
    }

Munin doesn’t really care about the data; it just saves it. But the key is important. In the Details table's case, the table is sorted by the full key. In the Messages table's case, things are different: we use a lambda to extract the primary key from each item's key, and additional lambdas to extract the secondary indexes. Munin can build secondary indexes only from the key, not from the value. Only the secondary indexes allow range queries; the PK allows only direct access, which is why we define both a primary key and a secondary index on MsgId.
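The split between key and data, and between the primary key and the secondary indexes, can be modeled in a few lines. The sketch below is hypothetical and simplified. ToyTable, AddIndex and this SkipAfter are illustrative names, not Munin's API. A string dictionary stands in for the JToken key, and the data is an opaque byte[] that is never inspected.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using KeyDoc = System.Collections.Generic.IReadOnlyDictionary<string, string>;

// Hypothetical model of a table with a primary key and sorted secondary indexes.
public sealed class ToyTable
{
    private sealed class Index
    {
        public Func<KeyDoc, string> Extract;
        // Entries sorted by (indexed value, primary key) with ordinal comparison.
        public SortedSet<(string Value, string Pk)> Entries = new SortedSet<(string Value, string Pk)>(
            Comparer<(string Value, string Pk)>.Create((a, b) =>
            {
                int c = string.CompareOrdinal(a.Value, b.Value);
                return c != 0 ? c : string.CompareOrdinal(a.Pk, b.Pk);
            }));
    }

    private readonly Func<KeyDoc, string> primaryKey;
    // Primary key -> stored row: exact lookups only, no ordering.
    private readonly Dictionary<string, (KeyDoc Key, byte[] Data)> rows =
        new Dictionary<string, (KeyDoc Key, byte[] Data)>();
    private readonly Dictionary<string, Index> indexes = new Dictionary<string, Index>();

    public ToyTable(Func<KeyDoc, string> primaryKey) => this.primaryKey = primaryKey;

    // Secondary indexes are computed from the key only, never from the data.
    // Add them before any Put; existing rows are not backfilled in this sketch.
    public void AddIndex(string name, Func<KeyDoc, string> extract) =>
        indexes.Add(name, new Index { Extract = extract });

    public void Put(KeyDoc key, byte[] data)
    {
        string pk = primaryKey(key);
        if (rows.TryGetValue(pk, out var old))
        {
            // Overwrite: drop the old key's index entries first.
            foreach (var index in indexes.Values)
                index.Entries.Remove((index.Extract(old.Key), pk));
        }
        rows[pk] = (key, data);
        foreach (var index in indexes.Values)
            index.Entries.Add((index.Extract(key), pk));
    }

    // Direct access through the primary key; returns null if absent.
    public byte[] Read(KeyDoc key) =>
        rows.TryGetValue(primaryKey(key), out var row) ? row.Data : null;

    // Range query over a secondary index: keys whose indexed value sorts strictly after `after`.
    // SkipWhile is a linear scan for brevity; a real sorted index would seek directly.
    public IEnumerable<KeyDoc> SkipAfter(string indexName, string after) =>
        indexes[indexName].Entries
            .SkipWhile(e => string.CompareOrdinal(e.Value, after) <= 0)
            .Select(e => rows[e.Pk].Key);
}
```

Note that only the index returns results in order. The primary-key dictionary answers "give me exactly this item" and nothing else.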

Let us see how we can save a new message:

    public void Enqueue(string queue, byte[] data)
    {
        messages.Put(new JObject
        {
            {"MsgId", uuidGenerator.CreateSequentialUuid().ToByteArray()},
            {"QueueName", queue},
        }, data);
    }

And now we want to read it:

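    public Message Dequeue(Guid after)
    {
        var key = new JObject { { "MsgId", after.ToByteArray() } };
        // Scan the ByMsgId secondary index for the first entry after the given id
        var result = messages["ByMsgId"].SkipAfter(key).FirstOrDefault();
        if (result == null)
            return null;
        // Use the key found in the index to read the full item, including its data
        var readResult = messages.Read(result);
        return new Message
        {
            Id = new Guid(readResult.Key.Value<byte[]>("MsgId")),
            // The key was stored with "QueueName" in Enqueue, so read it back under that name
            Queue = readResult.Key.Value<string>("QueueName"),
            Data = readResult.Data(),
        };
    }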

We do a bunch of stuff here: we scan the secondary index for the appropriate value, then use the key it returns to read the item from the table, load it into a DTO and return it.
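Dequeue only walks messages in arrival order if MsgId values sort the same way they were generated. That is presumably why Enqueue uses a sequential UUID generator. The following hypothetical SequentialIdGenerator illustrates that property; it does not reflect how RavenDB's uuidGenerator works. It writes a fixed prefix and a counter in big-endian byte order, so an unsigned byte-by-byte comparison orders ids by creation.

```csharp
using System;
using System.Threading;

// Hypothetical sequential id generator (not RavenDB's uuidGenerator).
// 16-byte ids: an 8-byte per-generator prefix followed by an 8-byte counter, both big-endian.
public sealed class SequentialIdGenerator
{
    private readonly ulong prefix;
    private long counter;

    public SequentialIdGenerator(ulong prefix) => this.prefix = prefix;

    public byte[] Next()
    {
        // Interlocked keeps ids unique and increasing even with concurrent callers.
        ulong sequence = (ulong)Interlocked.Increment(ref counter);
        var id = new byte[16];
        WriteBigEndian(id, 0, prefix);
        WriteBigEndian(id, 8, sequence);
        return id;
    }

    // Most significant byte first. In little-endian order, 256 = {0, 1, ...}
    // would sort before 1 = {1, 0, ...} and break FIFO ordering.
    private static void WriteBigEndian(byte[] buffer, int offset, ulong value)
    {
        for (int i = 7; i >= 0; i--)
        {
            buffer[offset + i] = (byte)value;
            value >>= 8;
        }
    }

    // Unsigned lexicographic comparison, the ordering a byte-array index would use.
    public static int Compare(byte[] a, byte[] b)
    {
        int common = Math.Min(a.Length, b.Length);
        for (int i = 0; i < common; i++)
            if (a[i] != b[i]) return a[i].CompareTo(b[i]);
        return a.Length.CompareTo(b.Length);
    }
}
```

With ids like these, "first entry after the last id I processed" is the next message enqueued by the same generator.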

Transactions

Munin is fully transactional, and it follows an append-only, MVCC, multiple-readers/single-writer model. The previous methods are run in the context of:

    using (queuesStorage.BeginTransaction())
    {
        // use the storage
        queuesStorage.Commit();
    }
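The small hypothetical sketch below makes the multiple-readers/single-writer model concrete. ToyStore, BeginWrite and WriteTransaction are illustrative names, not Munin's API. Readers take the currently published snapshot without locking. One writer at a time works on a private copy and publishes it with a single reference swap on Commit, and disposing without Commit discards the changes. Copying the whole dictionary per transaction is O(n), which a structure-sharing immutable tree avoids.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical multi-reader/single-writer store with snapshot reads; not Munin's API.
public sealed class ToyStore
{
    // The committed version is never mutated after it is published, so readers need no lock.
    private volatile Dictionary<string, string> committed = new Dictionary<string, string>();

    // Allows exactly one write transaction at a time. SemaphoreSlim is not thread-affine,
    // so a transaction may finish on a different thread than the one that began it.
    private readonly SemaphoreSlim writer = new SemaphoreSlim(1, 1);

    // Readers capture whatever version is current; later commits do not change what they see.
    public IReadOnlyDictionary<string, string> Snapshot() => committed;

    public WriteTransaction BeginWrite()
    {
        writer.Wait();
        // The writer works on a private copy; readers keep using the published version.
        return new WriteTransaction(this, new Dictionary<string, string>(committed));
    }

    public sealed class WriteTransaction : IDisposable
    {
        private readonly ToyStore store;
        private readonly Dictionary<string, string> pending;
        private bool finished;

        internal WriteTransaction(ToyStore store, Dictionary<string, string> pending)
        {
            this.store = store;
            this.pending = pending;
        }

        public void Put(string key, string value)
        {
            if (finished) throw new InvalidOperationException("Transaction already finished.");
            pending[key] = value;
        }

        public void Commit()
        {
            if (finished) throw new InvalidOperationException("Transaction already finished.");
            store.committed = pending;  // a single reference write publishes the new version
            Finish();
        }

        // Disposing without Commit discards the pending changes (rollback).
        public void Dispose()
        {
            if (!finished) Finish();
        }

        private void Finish()
        {
            finished = true;
            store.writer.Release();
        }
    }
}
```

Usage mirrors the `using (...) { ...; Commit(); }` shape above. The one difference is that this sketch commits through the transaction object rather than through the storage.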

Storage on disk

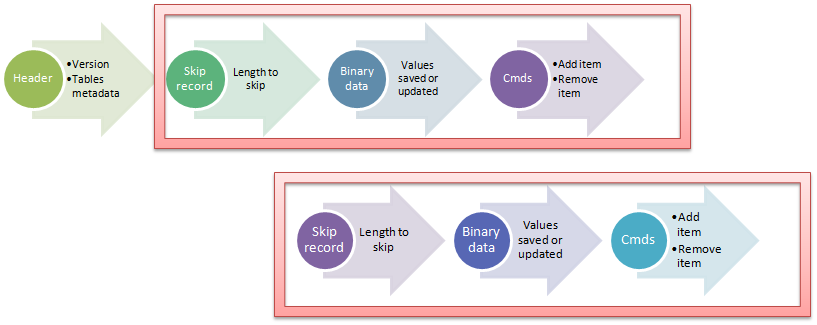

Munin can work with either a file or in memory (which makes unit testing it a breeze). It uses an append only model, so on the disk it looks like this:

Each of the red rectangle represent a separate transaction.
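The post doesn't describe the record format, so the sketch below uses a hypothetical one to show how an append-only log maps onto transactions. Each transaction appends its Put and Remove records followed by a Commit record. Replay on startup applies only the transactions whose Commit record reached storage. TransactionLog and the record layout are assumptions. Either a MemoryStream or a FileStream works, matching the file/in-memory choice above. Replay keeps only each value's position and size, not the value itself.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;

// Hypothetical append-only log format (not Munin's actual on-disk layout).
public static class TransactionLog
{
    private const byte PutRecord = 1, RemoveRecord = 2, CommitRecord = 3;

    // Appends one transaction; existing bytes are never modified.
    public static void Append(Stream log, IEnumerable<KeyValuePair<string, byte[]>> puts, IEnumerable<string> removes)
    {
        log.Seek(0, SeekOrigin.End);
        using (var writer = new BinaryWriter(log, Encoding.UTF8, leaveOpen: true))
        {
            foreach (var put in puts)
            {
                writer.Write(PutRecord);
                writer.Write(put.Key);          // length-prefixed UTF-8 string
                writer.Write(put.Value.Length);
                writer.Write(put.Value);
            }
            foreach (string key in removes)
            {
                writer.Write(RemoveRecord);
                writer.Write(key);
            }
            writer.Write(CommitRecord);         // marks the transaction as complete
        }
        log.Flush();
    }

    // Rebuilds key -> (position, size) of the latest committed value.
    // A trailing transaction without a Commit record (e.g. a crash mid-write) is ignored.
    public static Dictionary<string, (long Position, int Size)> Replay(Stream log)
    {
        var index = new Dictionary<string, (long Position, int Size)>();
        var pending = new List<(string Key, long Position, int Size, bool Removed)>();
        log.Seek(0, SeekOrigin.Begin);
        using (var reader = new BinaryReader(log, Encoding.UTF8, leaveOpen: true))
        {
            try
            {
                while (log.Position < log.Length)
                {
                    byte kind = reader.ReadByte();
                    if (kind == PutRecord)
                    {
                        string key = reader.ReadString();
                        int size = reader.ReadInt32();
                        pending.Add((key, log.Position, size, false));
                        log.Seek(size, SeekOrigin.Current);  // skip the value; keep only its location
                    }
                    else if (kind == RemoveRecord)
                    {
                        pending.Add((reader.ReadString(), 0, 0, true));
                    }
                    else if (kind == CommitRecord)
                    {
                        foreach (var op in pending)
                        {
                            if (op.Removed) index.Remove(op.Key);
                            else index[op.Key] = (op.Position, op.Size);
                        }
                        pending.Clear();
                    }
                    else break;  // unrecognized byte: treat the rest as a torn tail
                }
            }
            catch (EndOfStreamException)
            {
                // Truncated record: its transaction never committed, so pending is dropped.
            }
        }
        return index;
    }
}
```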

In memory data

You might have noticed that we don’t keep any complex data structures on disk. This is because all the actual indexing is done in memory; the data on disk is used solely for building the in-memory index. Do note that only the keys are held in memory, not the values. That means that searching Munin indexes is lightning fast, since we never touch the disk for the search itself.
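The ReadResult shape shown earlier fits this design. Searching needs only the in-memory keys, and Data is a Func<byte[]>, so the value can be read from storage only when the caller asks for it. The hypothetical sketch below illustrates that idea; KeysInMemoryStore and ToyReadResult are illustrative names, not Munin types.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical read result mirroring the shape of Munin's ReadResult.
public sealed class ToyReadResult
{
    public string Key { get; set; }
    public long Position { get; set; }
    public int Size { get; set; }
    public Func<byte[]> Data { get; set; }
}

// Hypothetical store: the sorted index in memory holds only key -> (position, size).
public sealed class KeysInMemoryStore
{
    private readonly Stream log;
    private readonly SortedDictionary<string, (long Position, int Size)> index =
        new SortedDictionary<string, (long Position, int Size)>(StringComparer.Ordinal);

    public KeysInMemoryStore(Stream log) => this.log = log;

    public void Put(string key, byte[] value)
    {
        long position = log.Seek(0, SeekOrigin.End);  // append only
        log.Write(value, 0, value.Length);
        index[key] = (position, value.Length);        // an older value for this key becomes garbage
    }

    // Searching touches only the in-memory index, never the stream.
    // Linear filter for brevity; a sorted index could seek to the prefix instead.
    public IEnumerable<string> KeysStartingWith(string prefix) =>
        index.Keys.Where(k => k.StartsWith(prefix, StringComparison.Ordinal));

    public ToyReadResult Read(string key)
    {
        if (!index.TryGetValue(key, out var entry)) return null;
        return new ToyReadResult
        {
            Key = key,
            Position = entry.Position,
            Size = entry.Size,
            // The stream is read only when the caller invokes Data().
            Data = () =>
            {
                var buffer = new byte[entry.Size];
                log.Seek(entry.Position, SeekOrigin.Begin);
                int offset = 0;
                while (offset < buffer.Length)
                {
                    int read = log.Read(buffer, offset, buffer.Length - offset);
                    if (read == 0) throw new EndOfStreamException();
                    offset += read;
                }
                return buffer;
            }
        };
    }
}
```

The memory cost therefore grows with the number and size of keys, not with the size of the stored values.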

The data is actually held in an immutable binary tree, which gives us the ability to do MVCC reads, without any locking.
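Path copying is one common way to get lock-free MVCC reads from an immutable binary tree. An insert copies only the nodes from the root down to the change and shares everything else, so a reader holding an older root keeps a consistent view. The sketch below is minimal and assumes this technique. ImmutableTree is a hypothetical name, and the tree is unbalanced for brevity; a production tree would also need rebalancing. Munin's actual tree may differ.

```csharp
using System;
using System.Collections.Generic;

// Minimal persistent (immutable) binary search tree using path copying.
public sealed class ImmutableTree
{
    private sealed class Node
    {
        public readonly string Key;
        public readonly string Value;
        public readonly Node Left, Right;

        public Node(string key, string value, Node left, Node right)
        {
            Key = key; Value = value; Left = left; Right = right;
        }
    }

    public static readonly ImmutableTree Empty = new ImmutableTree(null);

    private readonly Node root;

    private ImmutableTree(Node root) => this.root = root;

    // Returns a new version; this version is left untouched.
    public ImmutableTree Add(string key, string value) => new ImmutableTree(Insert(root, key, value));

    private static Node Insert(Node node, string key, string value)
    {
        if (node == null) return new Node(key, value, null, null);
        int c = string.CompareOrdinal(key, node.Key);
        // Copy the node on the path; reuse the untouched subtree as-is.
        if (c < 0) return new Node(node.Key, node.Value, Insert(node.Left, key, value), node.Right);
        if (c > 0) return new Node(node.Key, node.Value, node.Left, Insert(node.Right, key, value));
        return new Node(key, value, node.Left, node.Right);  // same key: replace the value
    }

    public bool TryGet(string key, out string value)
    {
        Node node = root;
        while (node != null)
        {
            int c = string.CompareOrdinal(key, node.Key);
            if (c == 0) { value = node.Value; return true; }
            node = c < 0 ? node.Left : node.Right;
        }
        value = null;
        return false;
    }

    // In-order traversal yields keys in sorted order, which is what range scans need.
    public IEnumerable<string> Keys()
    {
        var stack = new Stack<Node>();
        Node current = root;
        while (current != null || stack.Count > 0)
        {
            while (current != null) { stack.Push(current); current = current.Left; }
            current = stack.Pop();
            yield return current.Key;
            current = current.Right;
        }
    }
}
```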

Compaction

Because Munin uses an append-only model, it requires periodic compaction. RavenDB does this automatically, waiting for periods of inactivity to do so.
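In an append-only store, compaction comes down to copying only the live values into a fresh log and pointing the index at their new positions. Overwritten and removed values are simply not copied. The sketch below is hypothetical and assumes an in-memory index from key to (position, size). Compactor is an illustrative name. The sketch shows neither the scheduling (waiting for idle periods) nor the atomic swap of the old and new files.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical compaction for an append-only value log (not Munin's implementation).
public static class Compactor
{
    // Copies every value the index still points to into newLog and returns the rebuilt index.
    public static Dictionary<string, (long Position, int Size)> Compact(
        Stream oldLog,
        IReadOnlyDictionary<string, (long Position, int Size)> liveIndex,
        Stream newLog)
    {
        var newIndex = new Dictionary<string, (long Position, int Size)>();
        foreach (var entry in liveIndex)
        {
            var buffer = new byte[entry.Value.Size];
            oldLog.Seek(entry.Value.Position, SeekOrigin.Begin);
            ReadFully(oldLog, buffer);
            long position = newLog.Seek(0, SeekOrigin.End);  // the new log is append-only too
            newLog.Write(buffer, 0, buffer.Length);
            newIndex[entry.Key] = (position, buffer.Length);
        }
        newLog.Flush();
        return newIndex;
    }

    // Stream.Read may return fewer bytes than requested, so loop until the buffer is full.
    private static void ReadFully(Stream stream, byte[] buffer)
    {
        int offset = 0;
        while (offset < buffer.Length)
        {
            int read = stream.Read(buffer, offset, buffer.Length - offset);
            if (read == 0) throw new EndOfStreamException();
            offset += read;
        }
    }
}
```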

Summary

Munin is a low-level API, not something that you are likely to use directly. It was explicitly modeled to give me an interface similar in capability to what Esent gives me, but in purely managed code.

Please note that it is released under the same license as RavenDB, AGPL.

Comments

I like the name you selected

So, when will we see "Raven.Hugin" :-)

Great news :)

Alk.

So Ravens are the new Rhinos then?

You play Eve? :)

Do you also plan to replace Esent in current projects like Rhino Queues and Rhino ESB with Munin? Saving sagas in Munin (and outside your domain database) might be a good use.

You mentioned that for large-scale applications ESENT would be better suited, because Munin stores keys in memory.

What scenario are you targeting with Munin (data volume wise)?

Louis,

We currently use ~7 MB per 10,000 documents.

In other words, storing 1,000,000 documents would cost you about 0.7 GB.

We can probably optimize it further, and the nature of the data structure that we use means that we can handle paging of the in-memory index pretty well.

Esent does much better once you go over tens of millions of documents, but until then, we are good.

Dave,

I don't plan to do it, but someone else might

The name Munin is already used by a well-known (popular) server/cloud monitoring application.

http://munin-monitoring.org/

Could that make Google results a bit problematic in the future?

Esent is very slow with bulk inserts; PersistentDictionary, which is based on Esent, is very, very slow compared to Raven.ManagedStorage.Degenerate.

Dave,

The license would be problematic for existing queues and esb users. It would essentially require them to purchase a raven db license and I don't think they would appreciate that. I would love to do the work if that were not the case.

cowgaR,

Munin isn't intended to be a separate product.

Tonio,

Actually, that depends on a lot of factors.

Esent is actually faster than Munin for bulk insert, because of the way we use it.

It is maybe too soon to write about it on such a high-profile blog, but let's try... I am also trying to write a managed key-value DB with ACID and MVCC, but it has a much lower-level interface compared to Munin. I would definitely like to know if somebody finds it interesting.

https://github.com/Bobris/BTDB

Based on my yet limited understanding, this piece of software would work fairly well for persisting an Event Store...

I guess this thing ticks inside Raven MQ as well, in case you persist messages?

Frank,

Yes

Unfortunately, AGPL means that for the vast majority of situations in which I might consider deploying any of this, I can't.

(Same thing goes for RavenDB itself, really: the MongoDB approach to licensing (cross AGPL/Apache) [ http://blog.mongodb.org/post/103832439/the-agpl] is much more palatable and conducive to wider adoption.)

Shame, as it looks pretty cool...

Royston,

I am aware of that, but I am more interested in getting enough adoption to get people to pay for it than in getting high total adoption.

@Royston,

The real question is whether Ayende's interpretation of the AGPL license he is using is right.

Several other AGPL licensed apps clearly indicate that only mods to the core are what is being protected. For example both MongoDB and CiviCRM clearly interpret the AGPL as covering only the core app/db and not any application you develop that connects through the API.

Despite the explanation in an earlier blog post on the issue, it is hard to see how the license is actually any different for RavenDB and Munin than for these.
