Map / Reduce – A visual explanation

Map/Reduce is a term commonly thrown about these days, in essence, it is just a way to take a big task and divide it into discrete tasks that can be done in parallel. A common use case for Map/Reduce is in document database, which is why I found myself thinking deeply about this.

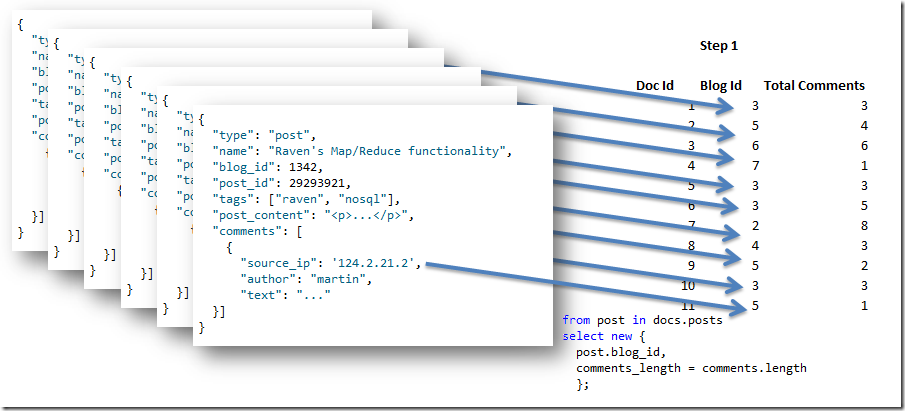

Let us say that we have a set of documents with the following form:

{

"type": "post",

"name": "Raven's Map/Reduce functionality",

"blog_id": 1342,

"post_id": 29293921,

"tags": ["raven", "nosql"],

"post_content": "<p>...</p>",

"comments": [

{

"source_ip": '124.2.21.2',

"author": "martin",

"text": "..."

}]

}

And we want to answer a question over more than a single document. That sort of operation requires us to use aggregation, and over large amount of data, that is best done using Map/Reduce, to split the work.

Map / Reduce is just a pair of functions, operating over a list of data. In C#, LInq actually gives us a great chance to do things in a way that make it very easy to understand and work with. Let us say that we want to be about to get a count of comments per blog. We can do that using the following Map / Reduce queries:

from post in docs.posts select new { post.blog_id, comments_length = comments.length }; from agg in results group agg by agg.key into g select new { agg.blog_id, comments_length = g.Sum(x=>x.comments_length) };

There are a couple of things to note here:

- The first query is the map query, it maps the input document into the final format.

- The second query is the reduce query, it operates over a set of results and produces an answer.

- Note that the reduce query must return its result in the same format that it received it, why will be explained shortly.

- The first value in the result is the key, which is what we are aggregating on (think the group by clause in SQL).

Let us see how this works, we start by applying the map query to the set of documents that we have, producing this output:

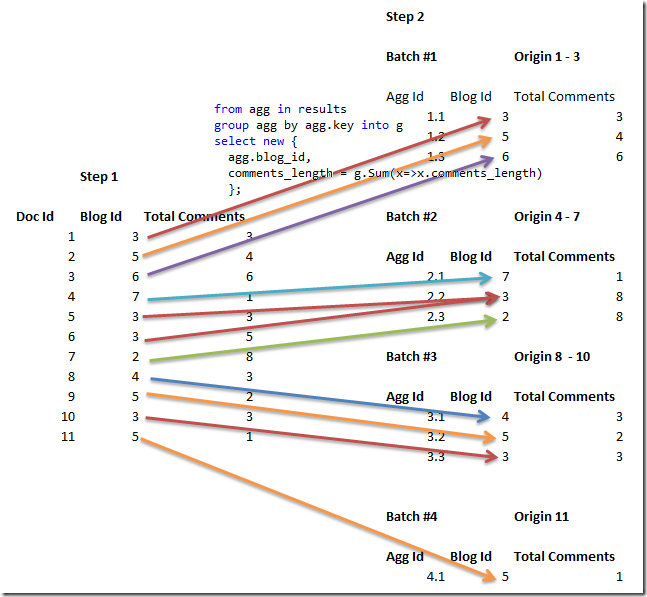

The next step is to start reducing the results, in real Map/Reduce algorithms, we partition the original input, and work toward the final result. In this case, imagine that the output of the first step was divided into groups of 3 (so 4 groups overall), and then the reduce query was applied to it, giving us:

You can see why it was called reduce, for every batch, we apply a sum by blog_id to get a new Total Comments value. We started with 11 rows, and we ended up with just 10. That is where it gets interesting, because we are still not done, we can still reduce the data further.

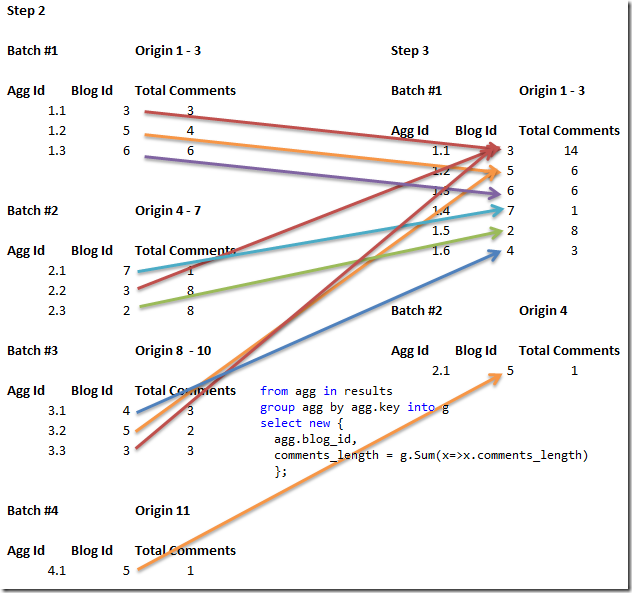

This is what we do in the third step, reducing the data further still. That is why the input & output format of the reduce query must match, we will feed the output of several the reduce queries as the input of a new one. You can also see that now we moved from having 10 rows to have just 7.

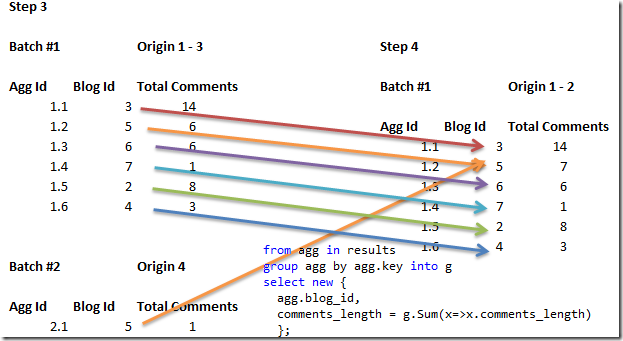

And the final step is:

And now we are done, we can't reduce the data any further because all the keys are unique.

There is another interesting property of Map / Reduce, let us say that I just added a comment to a post, that would obviously invalidate the results of the query, right?

Well, yes, but not all of them. Assuming that I added a comment to the post whose id is 10, what would I need to do to recalculate the right result?

- Map Doc #10 again

- Reduce Step 2, Batch #3 again

- Reduce Step 3, Batch #1 again

- Reduce Step 4

What is important is that I did not have to touch quite a bit of the data, making the recalculation effort far cheaper than it would be otherwise.

And that is (more or less) the notion of Map / Reduce.

Comments

hey!! thanks for the intro to map.reduce. heard about the term, never got around to investigating it. shall read more.

Thanks a lot - another buzzword demystified!

Great explaination.

Great visualization! What's interesting to me is that Map/Reduce will only work with aggregation operations that are associative (and commutative if allowed to be re-ordered) over the set of data.

I wonder how often that comes up? Using a summation is an obvious choice for map/reduce since addition is both associative and commutative.

However, a set of navigation instructions would probably not work well with map/reduce. I wonder what algorithms you would do then.

Haacked,

Average is a common thing to do that isn't easily associative.

You handle that by splitting the operation into two distinct operations, total count, and count of the values, both of which are associative, and then apply the final op in the end.

The fun part is that by its very nature, reduce requires you to perform the sort of operations that are either naturally that way, or can be composed of operations with this proeprty.

Calculating navigation is a weighted graph problem. That doesn't really lend itself to map/reduce solution set.

Look at tomorrow's post for more information about appropriate usage.

Great description and visualization of Map / Reduce. Really appreciate the LINQ example.

Original MapReduce paper by google (with graphical explanations as well)

http://labs.google.com/papers/mapreduce-osdi04.pdf

I think that'll make a great follow-up blog post. Map/Reduce is given so much attention now people tend to start thinking of it as a hammer for all nails. It'd be good to see where it is and isn't appropriate. :)

.Net 4 with PLINK would probably let you use this exact query and be parallel.

What part of "fork" and "join" don't we understand?

You might want to explain what you mean here

Good visualization.

Google made MapReduce famous, and most people think it's something really cool invented by Google.

MapReduce it's an old concept that belongs to Skeleton Programming Models, proposed by Murray Cole in 1989.

MapReduce is a Data parallel skeleton, because is data-centric parallelism (while pipeline/farm are called functional/stream parallel skeletons).

Just to mention, this is a page from 1993, a programming language (P3L), from the parallel programming group in Pisa: http://www.di.unipi.it/~susanna/p3lintro.html , look for MAP/REDUCE :)

...Google patented it :)

Map Reduce is the cloud version of multi-threading. It is not related to database queries.

"Note that the reduce query must return its result in the same format that it received it"

I don't think this is universally true of reduce functions. The basic reduce/fold function only specifices that a function is used to iterate over a list and build up a return value. The return value can be another list of items in the same structure, but it could also just be a primitive.

http://en.wikipedia.org/wiki/Fold_(higher-order_function)

"The folding of the list [1,2,3,4,5] with the addition operator would result in 15, the sum of the elements of the list [1,2,3,4,5]. To a rough approximation, one can think of this fold as replacing the commas in the list with the + operation, giving 1 + 2 + 3 + 4 + 5."

Specifically, the reduce function used by Google's algorithms (and others) may require that, but it is not the essense of reduce.

Dan,

It IS required if you want to be able to split the results and combine them later on.

In other words, if you need to run the reduce function over the results of the reduce function.

"And now we are done, we can reduce the data any further because all the keys are unique."

Did you mean "we can't" reduce?

More good coverage of Map/Reduce at http://nlp.stanford.edu/IR-book/pdf/04const.pdf page 9-13 of the PDF, or page 74-78 of the page numbers printed on the page. HTML version available starting with page nlp.stanford.edu/.../distributed-indexing-1.html

Great introduction to MapReduce.

As others have said map reduce is not new, and most modern RDBMS's incorporate a form of it.

For example MSSQL parallel execution plans with aggregates or CLR UDF's are essentially mapreduce.

Some RDBMS implementations can even scale out share nothing like a mapreduce cluster(Not MSSQL unfortunately, see Teradata).

Checkout: databasecolumn.vertica.com/.../mapreduce-a-majo... for a relational perspective on mapreduce.

Now I know what MapReduce is all about, Thanks for the heads up. Appreciate it!

Comment preview