Caching, the funny way

One of the most frustrating things in working with RavenDB is that the client API is compatible with the 3.5 framework. That means that for a lot of things we either have to use conditional compilation or we have to forgo using the new stuff in 4.0.

Case in point, we have the following issue:

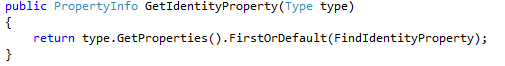

The code in question currently looks like this:

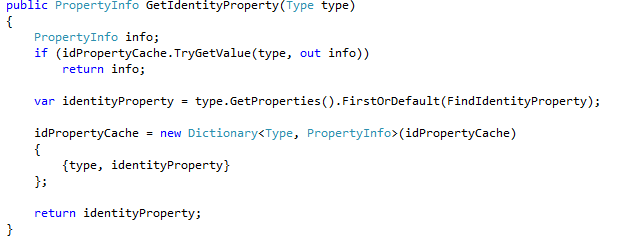

This is the sort of code that simply begs to be used with ConcurrentDictionary. Unfortunately, we can’t use that here, because of the 3.5 limitation. Instead, I went with the usual, non thread safe, dictionary approach. I wanted to avoid locking, so I ended up with:

Pretty neat, even if I say so myself. The fun part that without any locking, this is completely thread safe. The field itself is initialized to an empty dictionary in the constructor, of course, but that is the only thing that is happening outside this method. For that matter, I didn’t even bother to make the field volatile. The only thing that this relies on is that pointer writes are atomic.

How comes this works, and what assumptions am I making that makes this thing possible?

Comments

Summing up, not changing the read dictionary, replacing the read with a new one. That's the clue.

This solution is OK for cache, but you could lose some entries, written to the cache, between new Dictionary being published and old dictionary being copied over...

Couldn't this become a rather heavy operation if you have a great amount of types. Everytime this is called on a new type, the cache "expands" and creating the new dictionary instance takes more "time" I would think...

I also had the same thought as Konstantin, but indeed that's not critical for caching...

Konstantin, Bing! Exactly, we might lose cached entries, but we don't care about that, since this is a cache, losing values is all right.

To expand on what Konstantin said: You assume it doesn't matter if you lose a write to the cache. You assume it doesn't matter that you might 'generate' the identityProperty for the same type twice and not return the same value in those cases. (ConcurrentDictionary doesn't guarantee the generating a value only once, but does guarantee always returning the same value)

Besides that you assume the cache will never grow too large. Because by copying the dictionary every time you generate a value, you have yourself an O(n^2) operation there.

Meh, I guess I need to learn to type faster...

Koen, Yes, this is an O(N) operation, basically, where N is the number of types. Then again, when you consider how many types there are, it isn't really meaningful. There are about 13,000 types in the .NET framework, for example. O(N) of that is meaningless, and it is VERY unlikely that you'll get to that level

I believe there is a threading bug. The dictionary initializer will call the add method which throws on duplicate keys. And that can happen because you are repeatedly accessing the field which can change. The type you try to add could have just been added. Fix: Copy the field to a local first.

Tobi, Nice catch, yes, it is there.

Ayende,

When you add 1 item you have O(N) where N is the amount of items you already have. However, if you want to add N items it will be an O(N^2) operation.

For similar reasons people will become violent when you have:

string s = ""; for (int i = 0; i < 13000; i++) { s += (char) i; }You could argue that every iteration is just an O(N) operation, but that guy with the axe won't. ;-)

hmm, I guess I need to be more careful with < and > in messages.

For similar reasons people will become violent when you have:

string s = ""; for (int i = 0; i < 13000; i++) { s += (char) i; }You could argue that every iteration is just an O(N) operation, but that guy with the axe won't. ;-)

btw, the code looked fine in the preview at the bottom, so I hereby file that bug :)

@tobi, . Wouldn't your "copy to local" idea just cause new bugs? What happens two threads both copy to local variables, then add their respective types then both save. Only one of the two types would be saved. . This is nice if they both happened to be the same type as you suggest, but not so useful if they are different types. Still, I suppose it is thread safe though.

Mike, That is the exact point of having lost writes. In a cache, this is perfectly fine to do.

Patrick, You are correct, expect that we are usually talking about a N < 20, so even with N^20, the cost is negligible.

You are also making the assumption that the new dictionary will be initialized and in a valid state before it is published and visible to other threads.

This is not guaranteed for non-volatile fields. The optimizer (or the CPU cache) might re-order the statements and assign the new dictionary to idPropertyChange before the Add() method is called, or even before the constructor has finished running! You need a volatile write to ensure that the dictionary contents are visible to other processors before the reference to the dictionary becomes visible. However, this is currently only a theoretical problem - the current .NET implementations implicitly treat all writes as volatile. But processors other than x86/x64 often have weaker memory models where volatile writes don't come for free, so I wouldn't rely on all future .NET implementations to keep this behavior.

Daniel, Very good point, yes. I haven't considered that, memory models usually make my head hurt.

Earlier version of Rx for .net 3.5 contained backported System.Threading assembly. Last place I see them is here: http://code.google.com/p/rx-samples/source/browse/trunk/lib/Net35/?r=10

Also there are many nice LINQ in System.Interactive (also from .net 4)

I use the same approach in Json.NET for caching reflection data. It has been in the wild for years and I've yet to have any problems with it.

Have you done any empirical tests showing that recreating the dictionary is actually faster than the straightforward lock-before-adding approach?

There isn't just the cost of lock before adding, you also have to safe guard that a dictionary doesn't change while you're getting a value from it.

But no I've never done any performance tests around it.

There isn't just the cost of lock before adding, you also have to safe guard that a dictionary doesn't change while you're getting a value from it.

But no I've never done any performance tests around it.

Comment preview