Time series feature design: Replication

Armed with the knowledge about replication strategies from my previous post, we can now consider this in the context of the time series database.

We actually have two distinct pieces of data that we track: the actual time data (the timestamp and value that we keep track of) and the series information (tags, mostly). We can consider using log shipping here, and that would give us a great way to get a read replica. But the question is why we would want to do that. It is nice to get a replica, but that replica would have to be read only. Is that useful? It could take over if the master is down, but that would mean that the master would have to stay down (or be converted to a slave). Otherwise both servers accept writes, and divergent writes are a problem.

While attractive as a cheap way to handle replication, I don’t like this very much.

So that leaves us with multiple write partners (master/master). In this case, we can allow the two servers to operate in tandem. We need to have some way to resolve conflicts, and this is where things get a bit messy.

For series data, it is trivial to just use some form of last write wins. This assumes a synchronized clock between the servers, but we’ll leave that requirement for now.
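To make the idea concrete, here is a minimal sketch of last write wins for series information. The `SeriesInfo` type, its fields, and the tie-break on an `origin` server id are all assumptions for illustration, not the actual design. It also leans on the synchronized clock assumption mentioned above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SeriesInfo:
    # Hypothetical shape of the series information; not the real storage format.
    name: str
    tags: dict
    written_at: float  # write time, assumed comparable across servers
    origin: str        # id of the server that accepted the write


def last_write_wins(local: SeriesInfo, remote: SeriesInfo) -> SeriesInfo:
    """Pick the later write; break ties on origin so every server picks the same one."""
    return max(local, remote, key=lambda s: (s.written_at, s.origin))


on_a = SeriesInfo("cpu", {"host": "web-1"}, written_at=100.0, origin="A")
on_b = SeriesInfo("cpu", {"host": "web-2"}, written_at=105.0, origin="B")
print(last_write_wins(on_a, on_b).tags)
```

The tie-break matters more than it looks: without it, two servers seeing the same pair of writes in a different order could each keep their own copy.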

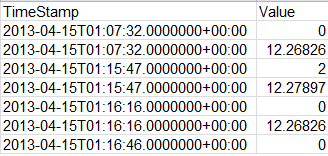

The problem is with the actual time data. Conceptually, we intend to store the information like this:

The problem is how you detect conflicts, and whether they are even possible. Let us assume that we want to update a particular value at time T on both servers. Server A replicates to server B, and now we need to decide how to deal with it. Ignore the value? Overwrite the value?

The important thing is that we need some predictable way to handle this that will end up with all the nodes in the cluster having the same agreed upon value. The simplest scenario, assuming a clock sync, is to use the write timestamp. But that would require us to keep the write timestamp. Currently we can use just 16 bytes for each time record. Recording the write timestamp would increase our usage to 24 bytes. That is a 50% increase just to handle conflicts. I don’t want to pay that.
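The arithmetic is easy to check. The sketch below assumes a record is an 8 byte timestamp plus an 8 byte value, and that the write timestamp would be another 8 bytes. Only the 16 and 24 byte totals come from the design; the field layout is my assumption.

```python
import struct

# Assumed layout: 8-byte timestamp (int64) + 8-byte value (float64), no padding.
BASE_RECORD = struct.Struct("<qd")
# Same record plus an assumed 8-byte write timestamp (int64).
TRACKED_RECORD = struct.Struct("<qdq")


def overhead_percent(base: struct.Struct, tracked: struct.Struct) -> float:
    """Extra space used by the tracked record, as a percentage of the base record."""
    return (tracked.size - base.size) / base.size * 100


print(BASE_RECORD.size, TRACKED_RECORD.size, overhead_percent(BASE_RECORD, TRACKED_RECORD))
# prints: 16 24 50.0
```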

The good thing about time series data is that a single value isn’t that important, and the likelihood that it will be changed is relatively small. We can just pick a rule, for example, always choose the maximum value written for that time, and be done with it. That has its own set of problems, but we’ll deal with that in a bit. We need to discuss how we deal with replication in general, first.
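A rough sketch of the "choose the maximum" rule, assuming each server's data for a series can be viewed as a mapping from timestamp to value. The `merge_max` name and the dictionary representation are illustrative only.

```python
def merge_max(local: dict, incoming: dict) -> dict:
    """Merge two timestamp -> value maps, keeping the larger value on conflict."""
    merged = dict(local)
    for timestamp, value in incoming.items():
        if timestamp not in merged or value > merged[timestamp]:
            merged[timestamp] = value
    return merged


server_a = {1: 10.0, 2: 5.0}
server_b = {2: 7.0, 3: 1.0}

# The order of the merge does not matter, so both servers end up with the same data.
print(merge_max(server_a, server_b) == merge_max(server_b, server_a))
# prints: True
```

The appeal is that the rule needs no extra bytes per record: the values themselves are enough to decide the winner. The cost is that a correction to a lower value can never win.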

Let us imagine that we have 3 servers:

- Server A replicates to B

- Server B replicates to C

- Server C replicates to A

We have concurrent writes to the same time value on both servers A and B. For the purpose of the discussion, let us assume that we have a way to resolve the conflict.

Server A notifies Server B about the change, but Server B already has a different value for that time. Conflict resolution is run, and we have a new value. That value needs to be replicated downstream. It goes to Server C, which then replicates it to Server A, which then replicates it to Server B? Ad infinitum?
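Here is a small simulation of that loop, as a sketch only. It assumes every server blindly forwards whatever it ends up with after conflict resolution, and it uses `max` as a stand-in resolver. The `replicate_naively` function and the message cap are hypothetical, added just so the simulation terminates.

```python
from collections import deque

# The replication topology from the example: A -> B -> C -> A.
RING = {"A": "B", "B": "C", "C": "A"}


def replicate_naively(values, start, resolve, max_messages=12):
    """Forward every resolved value downstream, with nothing to recognize an echo."""
    values = dict(values)
    queue = deque([(RING[start], values[start])])
    delivered = 0
    while queue and delivered < max_messages:
        target, incoming = queue.popleft()
        delivered += 1
        if target in values:
            values[target] = resolve(values[target], incoming)
        else:
            values[target] = incoming
        # No per-value metadata, so the server cannot tell news from an echo.
        queue.append((RING[target], values[target]))
    return delivered, values


delivered, final = replicate_naively({"A": 10.0, "B": 12.0}, "A", max)
print(delivered, final)
# prints: 12 {'A': 12.0, 'B': 12.0, 'C': 12.0}
```

All three servers agree after the third message, yet the messages keep circulating until the artificial cap is hit. Stopping for real means each server has to recognize that it has already seen a change, and that is exactly the kind of bookkeeping in question here.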

I intentionally chose this example, but the same thing can happen with just two servers replicating to one another (master/master). And the problem here is that in order to be able to actually track this properly, we are going to need to keep a lot of metadata around, per value. While I can sort of accept the need to keep the write time (thus requiring 50% more space per value), the idea of holding many times more metadata for replication purposes than the actual data we want to replicate seems… silly at best.

Log shipping replication it is, at least until someone can come up with a better way to resolve the issues above.

More posts in "Time series feature design" series:

- (04 Mar 2014) Storage replication & the bee’s knees

- (28 Feb 2014) The Consensus has dRafted a decision

- (25 Feb 2014) Replication

- (20 Feb 2014) Querying over large data sets

- (19 Feb 2014) Scale out / high availability

- (18 Feb 2014) User interface

- (17 Feb 2014) Client API

- (14 Feb 2014) System behavior

- (13 Feb 2014) The wire format

- (12 Feb 2014) Storage
