B+Trees and why I love them, Part II – splitting hairs (and pages)

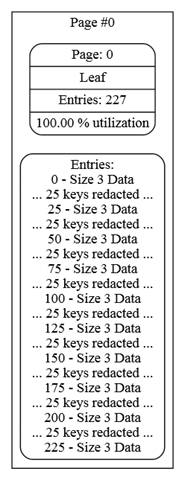

In the previous post, I gave a brief introduction about B+Trees. Now I want to talk about a crucial part of handling them. Let us start with the basic, we have the following tree, which consist of a single page:

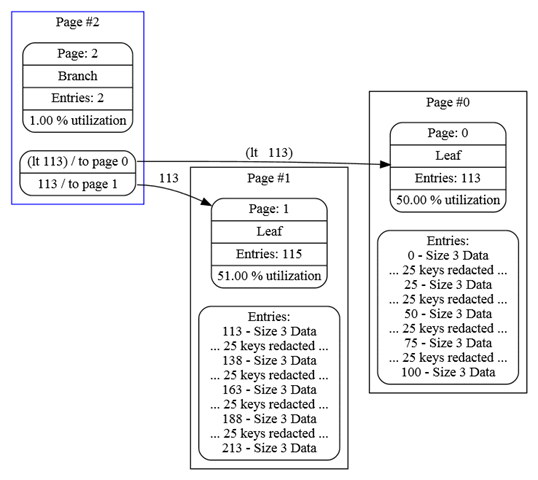

As you can imagine, attempting to add a single item to this page isn’t going to happen (there isn’t enough space), so we are going to have to something about it. That something is call page splitting. The easiest way to do that is to simply cut things in half, like so:

We have split the page, and now we are left with two pages that we can start writing to. Everyone is happy. Almost… in this case, you can see that we are doing sequential inserts. So page #0 is never going to have any additional data written to it. That means that we are wasting half this page!

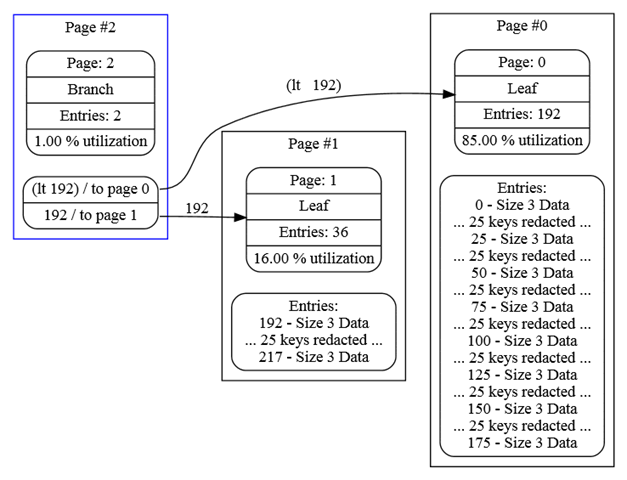

What I have done is to add just a tiny bit of smarts into the mix. Instead of doing a 50/50 split, we can detect if this is a sequential insert that is causing the split. If it is, we are going to split the data in a way that put more of it in the original page, like so:

We leave 85% of the data in the original page because that give some room to play with if one of the entries change there. This gives us a better page utilization in the common case of sequential inserts. But what about non sequential inserts? Well, if we detect that the page is being split because of a non sequential insert, we are going to do a 50/50 split, expecting that there would be additional non sequential inserts along the way.

I am not sure how useful that would be, but it seems like something that could be a nice optimization for a common case, and it requires nothing else from the user, we can do it all on our own.

Comments

Picky point: shouldn't it be 24 keys redacted?

Rob, yeah, You are correct.

If you were to update an entry in the new page #0, with a new value larger than can fit in that page, what happens? Do you do another 85% split, or 50/50? Would that node's entry become the first node in the new page or does it depend?

Brian, That depend where that entry is. If it is on the end, yes, it will be another bias split. If it is in the middle, it will be 50/50

Do you always split a page? How about checking to see if a sibling page has some extra space, then you can move the keys from one page to another and possibly save a split.

AFAIK all relational databases do such optimization already. SQL server will add a new, empty page instead of splitting when inserting keys in increasing sequence.

Kilman, Actually, the current implementation just create a new page. That isn't perfect, because if you do have another write later on to the original page, it will force a split. But that is fine for most purposes.

Systems that do special splits for b-tree appends (as you describe) often have a "fill-factor" which determines how full the last page in the b-tree should be before an append to that page causes a split. For example, JetCreateTable has a 'density' argument and Postgres index creation has a FILLFACTOR arguments.

Comment preview