Debugging memory issues with RavenDB using WinDBG

We had gotten a database export that showed a pretty rough case for RavenDB. A small set of documents (around 7K) that fan out in multiple map/reduce indexes to be around 70K to 180K (depending of which index is used).

As you can imagine, this puts quite a load on the system, I tried to use the usual methods (dotTrace, hand picking, etc) and we did get some good results on that, we found some pretty problematic issues along the way, and it was good. But we still had the RavenDB process take way too much memory. That means that we had to pull the big guns, WinDBG.

I took a dump of the process when it was using about 1 GB of memory, then I loaded that dump into WinDBG (6.2.9200.20512 AMD64).

I loaded SOS:

.loadby sos clr

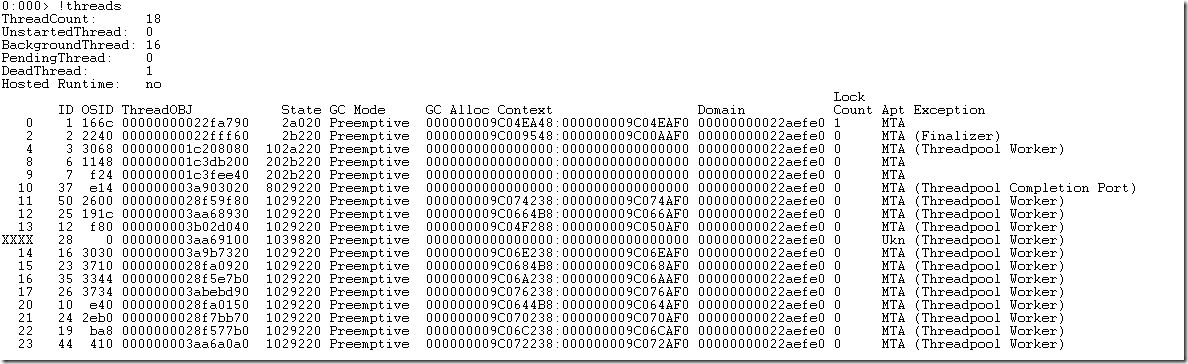

Then, the first thing to do was to try to see what is going on with the threads:

As you can see, we have a small number of threads, but nothing to write home about. The next step is to see if we have anything very big in the heap:

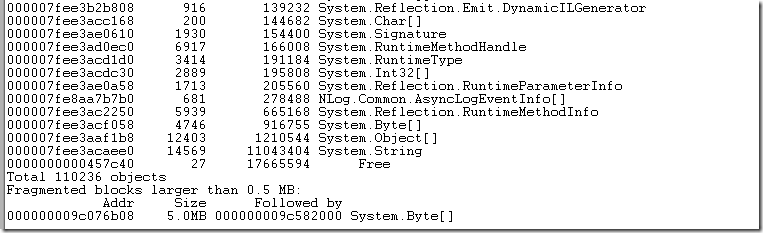

!dumpheap –stat

The first number is the method table address, the second is the count, and the third is the total size. The problem is that all of that combine doesn’t reach near as much memory as we take.

I guess it is possible that we hold a lot of data in the stack, especially since the problem is likely caused by indexing.

I decided to map all of the threads and see what they are doing.

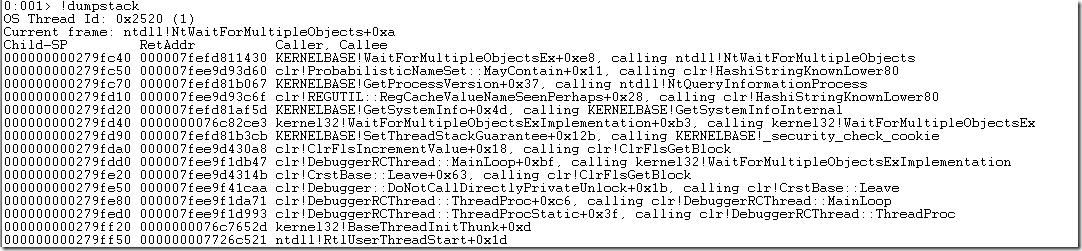

Switches me to thread #1, and we can see that we are currently in a waiting. Dumping the stack reveals:

This seems to be the debugger thread. Let us look at the rest:

- 2 – Finalizer

- 3 – Seems to be an inactive thread pool thread.

- 6 – appears to be an esent thread:

I am not sure what this is doing, and I am going to ignore this for now. - 7 – also esent thread:

And… I got tired of this, and decided that I wanted to do something more productive, which is to selectively disable things in RavenDB until I find something that drops the memory usage enough to be worth while.

Whack a mole might not be a great debugging tool, but it the essence of binary search. Or so I tell myself to make my conscience sleep more easily.

For reference, you can look at the dump file here.

Comments

http://memprofiler.com is a great memory profiler. See also http://stackoverflow.com/questions/399847/net-memory-profiling-tools and http://www.apress.com/9781430244585 for alternatives.

windbg is not really user friendly. Even helped by Tess's blog here http://blogs.msdn.com/b/tess/archive/tags/memory+issues/default.aspx?PageIndex=1 I can't figure it out.

Keep in mind !dumpheap shows the managed heap. It won't show you the unmanaged memory used by the process, and if you're using esent that points more fingers toward the issue being in unmanaged memory.

Loading the dmp and using !address -summary shows 1.15 Gb of committed memory, but as you note the problem does not seem to be in a managed heap since !dumpheap, !eeheap and !heapstat all show memory consumption less than 100 Mb.

This seems to indicate most memory is being allocated and used by unmanaged code.

You mentioned that you are using ESENT. Did you see another user ran into a problem with Managed ESENT that caused over 1 GB of memory to be consumed? http://managedesent.codeplex.com/discussions/276175

As your managed heap is very low, have you considered using DebugDiag's LeakTrack to try and catch native allocations that leak?

My immediate thought when I saw this was Esent too. Setting the cache parameters solved a similar problem for me. I believe there are actually some config options for this in RavenDB, but you probably know that ;)

Roman, I am pretty sure that the problem is not in managed code, so memprofiler isn't likely to help me here.

Chris, I am pretty sure it is an Esent issue, but I am also pretty sure that it isn't the cache. We added some diagnostics, and the cache takes ~400MB, so that isn't counting for a lot of the data, still investigating.

A couple of tools I've found helpful fast/easy/simple to use for memory investigation:

http://www.microsoft.com/en-us/download/details.aspx?id=16273

Much like the GitHub's situation with "the bridge loop problem that wasn't there" (https://github.com/blog/1346-network-problems-last-friday), I find it important to use the coarse-granularity tools available to help narrow down the problem (even if it's just to verify a 'hunch') before bringing out the tools like windbg :)

Of course, that's exactly what Ayende did here, of course, just reiterating that it's a great idea. :)

Ayende, I had to mention that memprofiler partially analyses non-managed code. See http://memprofiler.com/OnlineDocs, "Native Memory Page" section. There is no guarantee that it will solve your particular issue, but it is free to try.

As for the book, it doesn't cover memprofiler in any detail, but provides several good strategies for memory profiling in general. Not sure they will be new for you, though.

Note that using memprofiler with unmanaged resources tracker enabled to profile your process will give you more information than using it to analyze a dump file.

You should create several dumps while the memory is growing to see a pattern in one of the threads to see where it is actually allocating something. Only by looking at the threads while they are not allocating will not lead the way. With VMMap from SysInternals you can have a look at the contents and newly allocated stuff as well. That can also give some clue what the stuff taking so much space actually is.

Ayende, have you tried to compare memory usage of ESENT vs Munin?

Comment preview