RavenDB: Self optimizing Ids

One of the things that is really important for us in RavenDB is the notion of Safe by Default and Zero Admin. What this means is that we want to make sure that you don’t really have to think about what you are doing for the common cases; RavenDB will understand what you mean and figure out the best way to do things.

One of the cases where RavenDB does that is when we need to generate new ids. There are several ways to generate new ids in RavenDB, but the most common one, and the default, is to use the hilo algorithm. It basically (ignoring concurrency handling) works like this:

    var currentMax = GetMaxIdValueFor("Disks");
    var limit = currentMax + 32;
    SetMaxIdValueFor("Disks", limit);

And now we can generate ids in the range of currentMax to currentMax+32, and we know that no one else can generate those ids. Perfect!
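To make the reservation step concrete, here is a minimal sketch in Python. It is not the RavenDB client API: `InMemoryServer`, `HiLoGenerator` and `reserve_range` are hypothetical names, the server is faked in memory, and the sketch assumes that ids start at 1 and that a reserved range is inclusive on both ends.

```python
import threading


class InMemoryServer:
    """Hypothetical stand-in for the server-side max id value."""

    def __init__(self):
        self._max_ids = {}
        self._lock = threading.Lock()
        self.requests = 0  # counts round trips, for illustration only

    def reserve_range(self, collection, size):
        # Atomically read the current max and move it forward by `size`.
        with self._lock:
            self.requests += 1
            current_max = self._max_ids.get(collection, 0)
            limit = current_max + size
            self._max_ids[collection] = limit
            return current_max + 1, limit  # inclusive range, owned by the caller


class HiLoGenerator:
    """Hands out ids locally and only calls the server when the range runs out."""

    def __init__(self, server, collection, range_size=32):
        self._server = server
        self._collection = collection
        self._range_size = range_size
        self._next = 1
        self._limit = 0  # empty range, forces a reservation on first use

    def next_id(self):
        if self._next > self._limit:
            self._next, self._limit = self._server.reserve_range(
                self._collection, self._range_size
            )
        value = self._next
        self._next += 1
        return f"{self._collection}/{value}"


server = InMemoryServer()
gen = HiLoGenerator(server, "disks")
ids = [gen.next_id() for _ in range(40)]
```

In this sketch, generating 40 ids costs two server requests (one per range of 32). A generator created afterwards against the same server starts from a fresh range, which is where gaps in the id sequence come from.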

The good thing about it is that now that we have a reserved range, we can create ids without going to the server. The bad thing about it is that we have now reserved a range of 32. If we create just one or two documents and then restart, we would need to request a new range, and the rest of the old range would be lost. That is why the default range size is 32. It is small enough that gaps aren’t that important*, but since in most applications you create entities on an infrequent basis, and usually just one at a time, it is big enough to still provide a meaningful reduction in the number of times you have to go to the server.

* What does it mean, “gaps aren’t important”? The gaps are never important to RavenDB, but people tend to be bothered when they see disks/1 and disks/2132 with nothing in the middle. Gaps are only important for humans.

So this is perfect for most scenarios, except for one very common scenario: bulk import.

When you need to load a lot of data into RavenDB, you will very quickly note that most of the time is actually spent just getting new ranges. More time than actually saving the new documents takes, in fact.

Now, this value is configurable, so you can set it to a higher value if you care for it, but still, that was annoying.
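As a rough illustration of why the range size matters for bulk imports, the sketch below counts how many range requests a single client needs for a given number of new documents. The document count and the larger range size are made-up numbers, and `range_requests_needed` is a hypothetical helper, not part of RavenDB.

```python
import math


def range_requests_needed(document_count, range_size):
    # Each request reserves `range_size` ids, so we need one request
    # per full or partial range consumed.
    return math.ceil(document_count / range_size)


default_requests = range_requests_needed(100_000, 32)
larger_requests = range_requests_needed(100_000, 1024)
```

With these assumed numbers, the default range size means 3,125 extra round trips, while a range of 1,024 brings that down to 98.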

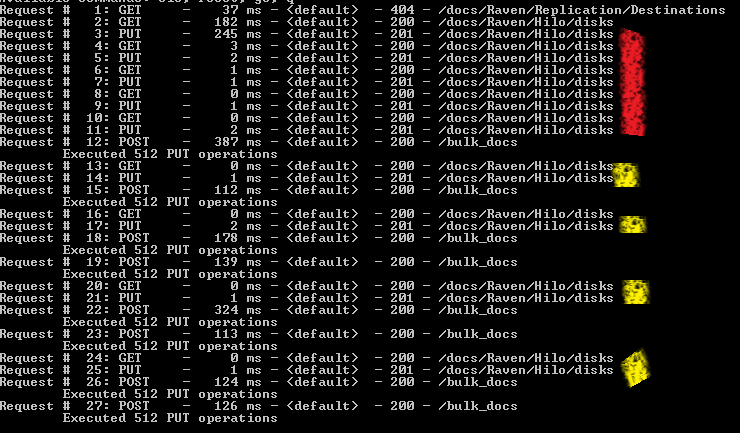

Hence, what we have now. Take a look at the log below:

It details the request pattern in a typical bulk import scenario. We request an id range for disks, and then we request it again, and again, and again.

But notice what happens as time goes by (and not that much time): RavenDB recognizes that you need bigger ranges, and it gives them to you. In fact, very quickly we can see that we only request a single range per batch, because RavenDB has optimized itself based on our own usage pattern.
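The post does not spell out the heuristic, so the following is only one plausible way to get this behavior, not RavenDB's actual implementation. The sketch assumes the client doubles the range size whenever it asks for a new range shortly after the previous request, and halves it again after a long idle period. `AdaptiveRangeSizer` and all of its thresholds are hypothetical.

```python
class AdaptiveRangeSizer:
    """Picks the size of the next id range from how quickly ranges are used up."""

    def __init__(self, base=32, max_size=4096, fast_seconds=1.0, slow_seconds=60.0):
        self.base = base                  # assumed default range size
        self.max_size = max_size          # assumed upper bound, to limit gaps
        self.fast_seconds = fast_seconds  # requests closer than this grow the range
        self.slow_seconds = slow_seconds  # requests further apart shrink it
        self.size = base
        self._last_request = None

    def next_size(self, now):
        # `now` is a timestamp in seconds, passed in so the logic is easy to test.
        if self._last_request is not None:
            elapsed = now - self._last_request
            if elapsed < self.fast_seconds:
                self.size = min(self.size * 2, self.max_size)
            elif elapsed > self.slow_seconds:
                self.size = max(self.size // 2, self.base)
        self._last_request = now
        return self.size


sizer = AdaptiveRangeSizer()
# Simulated bulk import: five range requests, 0.1 seconds apart.
burst = [sizer.next_size(now=t * 0.1) for t in range(5)]
# A single request after a long idle period.
after_idle = sizer.next_size(now=500.0)
```

Under these assumptions the range grows from 32 to 512 within five requests, then drops back to 256 once the client goes quiet, which keeps gaps small for applications that only create a document now and then.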

Kinda neat, even if I say so myself.

Comments

What was the reason you didn't go with Comb Guid which wouldn't have the same problem? Performance of some kind or just personal preference?

Shane, Guids aren't human readable.

Does this way of generating ids not conflict in a replicated environment?

Edward, You generally do not generate ids in this manner in a replicated env.

What would be the recommended way of generating human readable ids for a replicated environment?

Edward, Is it a master / master? Master / slave? If it is master slave, it is easy.

If it is master / master, you probably want to add the write server id to the id, to ensure uniqueness, something like users/1/1234

It's a master/master, could also be a master/master/master.

You mean users/serverId/1234 ?

Edward, Yes, that is the idea. Or you use a guid, but that isn't as readable.

Edward, I think it's a bad idea to have a multi-master db (where multi >2), I'm trying a master-multislave configuration with failover instead

Mauro, I do not (yet) have replication setups with RavenDB.

Can a slave in RavenDB also do CUD?

At this moment we have a few MSSQL DBs which have merge replication. These are master/master../master types which can also work in a disconnected environment (nice with crappy connections) and thus in different physical locations. Although it works nicely, it is far from zero config and sometimes high maintenance.

Wow, Raven learns from recent usage patterns. Very cool.

So basically, you have to insert 32 documents at a time, and that is the only option other than looking at the last Id and manually updating the Id integer for every document? Or you can query the whole collection for the last Id.. there's gotta be a better way.

Jesse, Huh? How did you get to that? What we are actually doing is optimizing ourselves on demand, so if you are inserting bulk data, we would reduce the number of requests to the server

Thank you for the fast reply! I understand that this could be handy for bulk inserts, but wouldn't the majority of inserts be 1 at a time? It's pretty annoying to have my Ids be 1, 32, 64, etc. And maybe I just need to look into this more before getting too frustrated, and maybe there's a simple way to do something like "id = getmaxid +1"

Jesse, We have this option, sure. See here: http://ravendb.net/docs/theory/document-key-generation
